Data teams have long faced a tough choice between data lakes and data warehouses, and each option has drawbacks that slow down work and increase costs. Data lakes store many different data types cheaply but lack the reliability and structure needed for business decisions. Data warehouses provide that reliability, but they cost more and are built mainly for structured data.

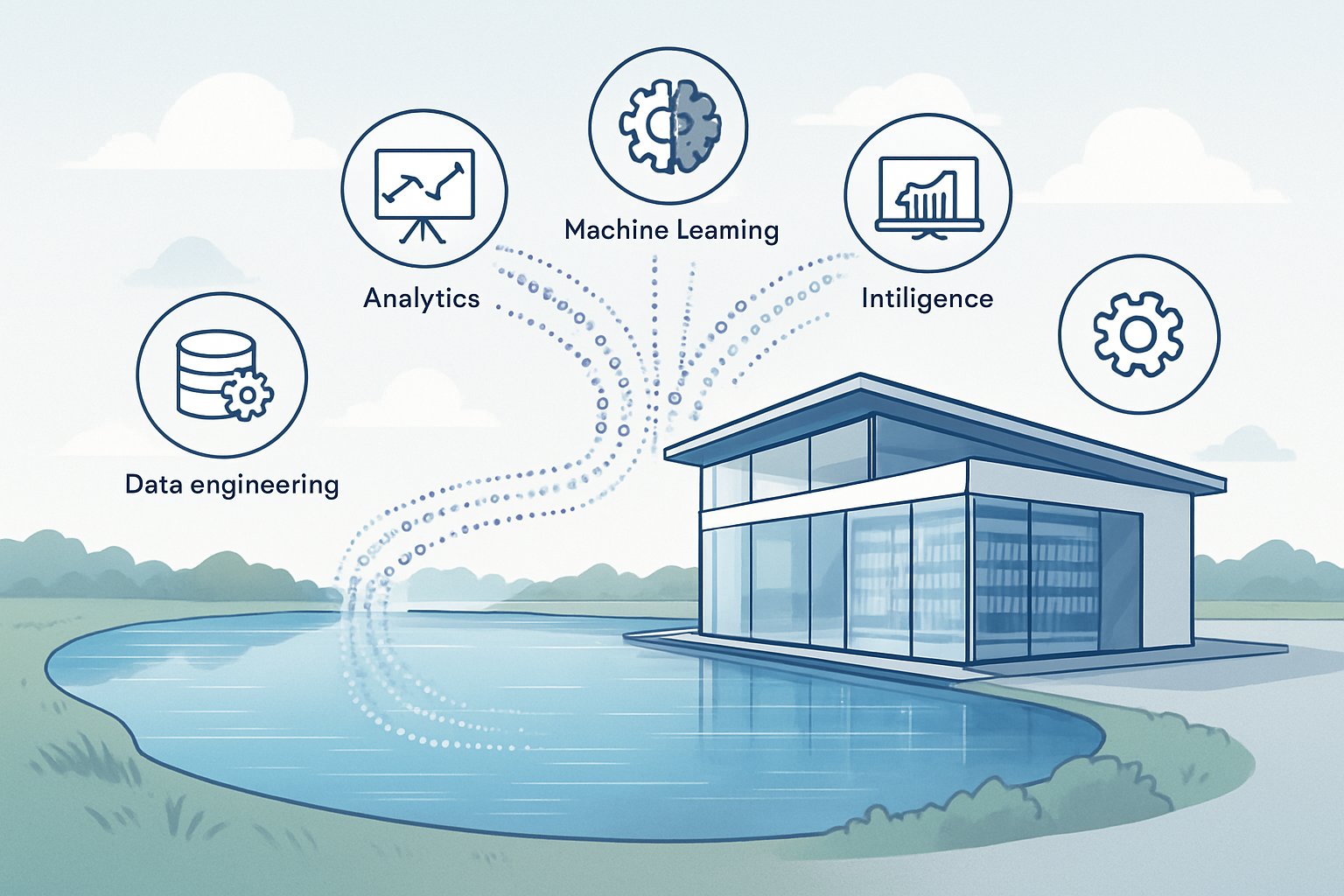

A lakehouse combines the low-cost storage of data lakes with the performance and reliability of data warehouses in one unified system. This approach lets companies keep their data in one place while supporting everything from basic reports to advanced AI projects. The lakehouse handles structured tabular data alongside unstructured content such as images and videos.

Organizations using lakehouse systems can run faster analytics, reduce data copying between systems, and support both traditional business intelligence and modern machine learning. This guide will walk through how lakehouse technology works, the key platforms available, and real-world ways companies use this approach to solve data challenges.

Key Takeaways

- Lakehouses merge data lake storage benefits with data warehouse performance and governance features

- The architecture supports diverse workloads from business reports to machine learning on a single platform

- Implementation requires careful planning around data organization, quality management, and platform selection

Defining the Lakehouse: Core Concepts and Advantages

A data lakehouse combines the flexible storage of data lakes with the powerful analytics of data warehouses. This unified approach lets companies store all types of data in one place while keeping strong data management features.

What Sets a Lakehouse Apart

A lakehouse uses object storage as its foundation, similar to data lakes. On top of that storage, it adds a metadata layer that brings table structure to raw files.

This metadata layer manages schemas, handles ACID transactions, and helps track data quality. Previously, these features were found mainly in traditional data warehouses.

The architecture supports both structured and unstructured data in the same system. Once files in the lake are registered as tables, users can query them with SQL just as they would query database tables.

Open table formats like Delta Lake, Apache Iceberg, and Apache Hudi make this possible. These formats add database-like features to files stored in cloud storage.

Unlike traditional data warehouses, which often rely on proprietary storage engines and formats, lakehouses keep data in open formats on commodity cloud object storage and use elastic cloud compute.

Benefits Over Data Warehouses and Data Lakes

Lakehouses solve major problems that exist with separate data warehouse and data lake systems.

Cost savings come from using cheap object storage instead of expensive warehouse storage. Companies can store much more data for less money.

Data lakes often become data swamps with poor quality control. Lakehouses help prevent this with built-in data governance and quality checks.

Data warehouses struggle with unstructured data like images, videos, and documents. Lakehouses handle all data types without conversion.

Time-to-insight shortens because data doesn’t need to move between systems. Analytics teams can work directly on the source data.

Machine learning workloads benefit from direct access to raw data. Data scientists don’t have to extract and transform data first.

Unified Data Platform Approach

The unified platform eliminates the need for multiple data systems. Companies can replace their data warehouse and data lake with one lakehouse.

Single source of truth means all teams work with the same data. This reduces confusion and improves decision-making across the organization.

Different tools can connect to the same lakehouse storage. Business intelligence tools, machine learning platforms, and analytics software all work together.

Simplified data architecture reduces maintenance costs and complexity. IT teams manage one system instead of several separate ones.

Real-time and batch processing happen on the same platform. Companies can analyze streaming data alongside historical data without moving it.

The approach supports both self-service analytics and governed data access. Business users get the flexibility they need while IT maintains control.

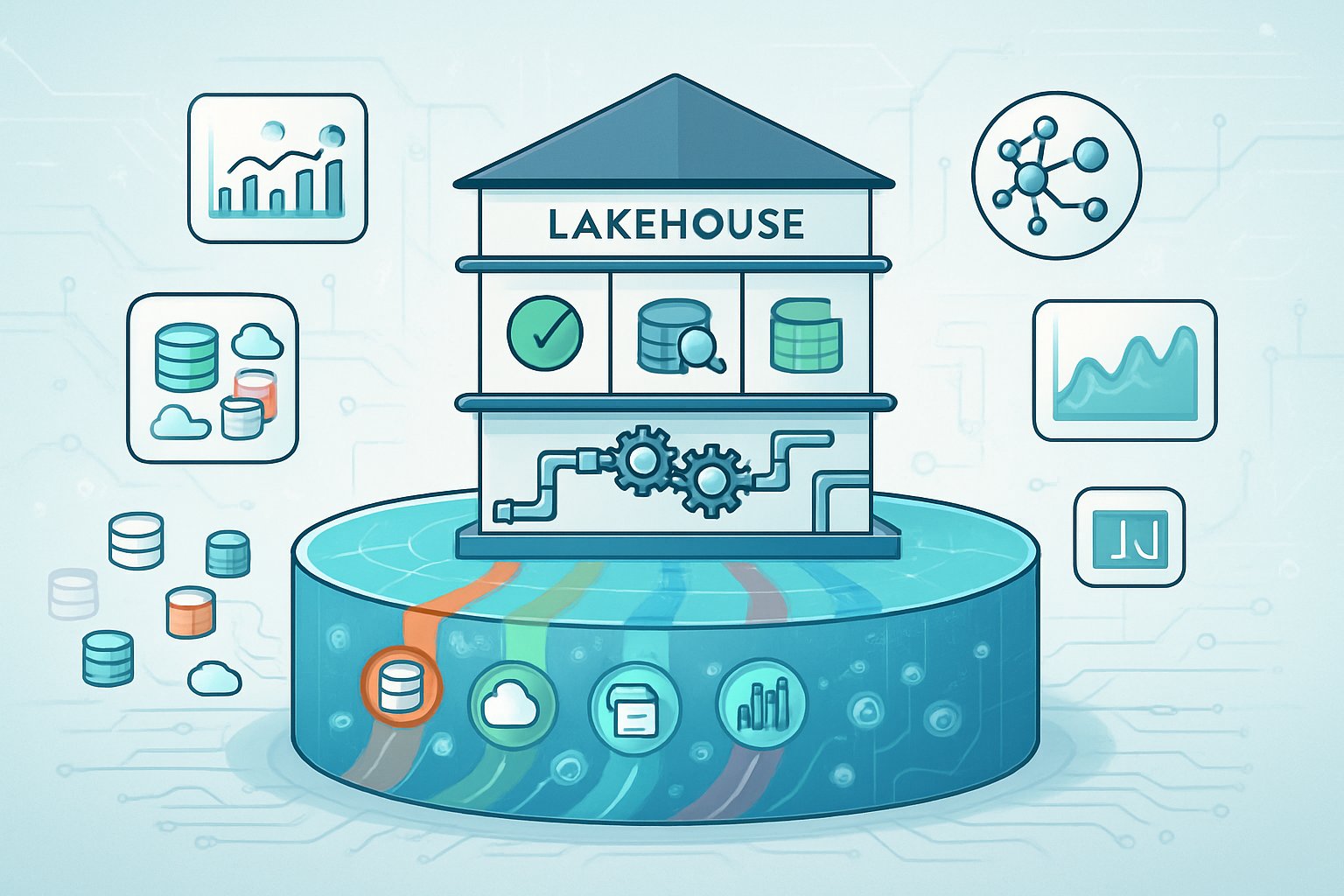

Lakehouse Architecture: Components and Design

A lakehouse architecture combines distinct layers and components that work together to deliver both flexibility and performance. The design separates storage from compute while maintaining strong governance through metadata management and open data formats.

Layered Breakdown of Lakehouse Architecture

Many lakehouse implementations organize data in a medallion structure with three main layers. Each layer serves a specific purpose in data processing and refinement.

The bronze layer stores raw data in its original format. This layer ingests data from various sources without transformation. Files remain in native formats like JSON, CSV, or Parquet.

The silver layer contains cleaned and validated data. This layer removes duplicates and applies basic transformations. Schema enforcement begins at this stage to ensure data quality.

The gold layer holds highly refined, business-ready data. This layer aggregates and structures data for analytics and reporting. Data here follows strict schema requirements and business rules.

Each layer builds upon the previous one. Organizations can access data at any layer based on their needs. This approach supports both raw data exploration and structured analytics.
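The flow between the three layers can be illustrated with a minimal, in-memory Python sketch. It assumes a hypothetical orders feed with `order_id`, `customer`, and `amount` fields, and the helper names `to_silver` and `to_gold` are illustrative only; a real pipeline would typically run on an engine such as Spark and write each layer to tables rather than Python lists.

```python
import json

# Hypothetical raw events as they might land in the bronze layer: unmodified JSON strings.
bronze = [
    '{"order_id": 1, "customer": "a", "amount": "19.99"}',
    '{"order_id": 1, "customer": "a", "amount": "19.99"}',  # duplicate delivery
    '{"order_id": 2, "customer": "b", "amount": "5.00"}',
    '{"order_id": 3, "customer": "a", "amount": null}',      # fails validation
]

def to_silver(raw_rows):
    """Bronze -> silver: parse, validate, cast types, and drop duplicate order_ids."""
    seen, silver = set(), []
    for line in raw_rows:
        row = json.loads(line)
        if row["amount"] is None or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        silver.append({"order_id": int(row["order_id"]),
                       "customer": str(row["customer"]),
                       "amount": float(row["amount"])})
    return silver

def to_gold(silver_rows):
    """Silver -> gold: aggregate cleaned rows into a business-ready revenue table."""
    revenue = {}
    for row in silver_rows:
        total = revenue.get(row["customer"], 0.0) + row["amount"]
        revenue[row["customer"]] = round(total, 2)
    return revenue

silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'a': 19.99, 'b': 5.0}
```

Keeping the bronze list untouched mirrors the idea that raw data stays available for reprocessing if the silver or gold logic changes later.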

Storage and Compute Separation

Modern lakehouse designs separate storage and compute resources completely. This separation allows independent scaling of each component based on workload demands.

Object storage forms the foundation of lakehouse architecture. Cloud storage services like Amazon S3 or Azure Data Lake Storage provide virtually unlimited capacity. Object stores handle petabytes of data cost-effectively.

Compute engines connect to storage when processing is needed. Multiple engines can access the same data simultaneously. This includes Spark clusters, SQL engines, and machine learning platforms.

Because every engine reads the same copy of the data, this separation reduces data silos and costs. Organizations pay for storage and compute independently. They can scale compute resources up during heavy processing periods and down during quiet times.

Metadata and Governance Layers

Metadata management tracks data lineage, schema, and access patterns across the lakehouse. This layer maintains a catalog of all datasets and their relationships.

Data governance enforces policies and standards throughout the architecture. Access control mechanisms determine who can view or modify specific datasets. Role-based permissions protect sensitive information.

Schema evolution allows data structures to change over time without breaking existing processes. The metadata layer tracks these changes and maintains compatibility. Existing queries that do not reference newly added columns typically keep working unchanged.
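Additive schema evolution can be pictured with a toy model. The `EvolvingTable` class below is hypothetical and is not the API of any table format; it only shows the principle that adding a column leaves existing rows untouched, and those rows read the new column as null (`None`).

```python
class EvolvingTable:
    """Toy table that tracks a schema and allows additive schema evolution."""

    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> Python type
        self.rows = []

    def add_column(self, name, col_type):
        # Additive change: existing rows are not rewritten.
        if name in self.schema:
            raise ValueError(f"column {name!r} already exists")
        self.schema[name] = col_type

    def append(self, row):
        self.rows.append(dict(row))

    def read(self, columns=None):
        # Columns missing from older rows read as None (null).
        cols = columns or list(self.schema)
        return [{c: row.get(c) for c in cols} for row in self.rows]

t = EvolvingTable({"id": int, "name": str})
t.append({"id": 1, "name": "widget"})
t.add_column("category", str)  # the schema now has a third column
t.append({"id": 2, "name": "gadget", "category": "tools"})

all_columns = t.read()                 # new readers see the new column
original_view = t.read(["id", "name"])  # an older query that selects only the original columns
```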

Governance tools monitor data quality and compliance requirements. They flag issues like missing data or policy violations. Automated alerts notify administrators of problems requiring attention.

Open Formats and Interoperability

Open file formats like Parquet and open table formats like Delta Lake enable interoperability across tools in the lakehouse. These formats work with multiple processing engines, which reduces vendor lock-in.

Parquet provides efficient columnar storage for analytics workloads. It compresses data well and supports fast query performance. Most modern tools can read Parquet files natively.

Delta Lake adds ACID transactions and time travel capabilities on top of Parquet files. It handles concurrent reads and writes safely. Schema enforcement rejects writes that do not match the table's schema, which keeps malformed data out of tables.
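The behavior of schema enforcement can be sketched in plain Python. The `validate` and `write` helpers and the `SchemaMismatchError` type are hypothetical and only mimic the idea described above: a batch that does not match the declared schema is rejected as a whole, so the table never holds a partial write.

```python
class SchemaMismatchError(Exception):
    pass

def validate(row, schema):
    """Reject rows with missing, unexpected, or wrongly typed columns (None counts as null)."""
    if set(row) != set(schema):
        raise SchemaMismatchError(f"columns {sorted(row)} != {sorted(schema)}")
    for col, expected in schema.items():
        value = row[col]
        if value is not None and not isinstance(value, expected):
            raise SchemaMismatchError(
                f"{col}: expected {expected.__name__}, got {type(value).__name__}")

def write(table, rows, schema):
    # Validate the whole batch before appending anything (all-or-nothing).
    for row in rows:
        validate(row, schema)
    table.extend(rows)

schema = {"id": int, "amount": float}
table = []
write(table, [{"id": 1, "amount": 9.5}], schema)

rejected = False
try:
    # The second row has a string id, so the entire batch is refused.
    write(table, [{"id": 2, "amount": 3.0}, {"id": "3", "amount": 1.0}], schema)
except SchemaMismatchError as err:
    rejected = True
    print("rejected:", err)
```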

ELT processes work seamlessly with open formats. Data engineers can extract and load data first, then transform it using various tools. This flexibility supports different processing approaches and team preferences.

Key Lakehouse Technologies and Platforms

Several core technologies enable lakehouse architecture to work effectively. These include table formats like Delta Lake and Apache Iceberg, cloud platforms from major providers, and integration tools that connect different storage systems.

Delta Lake and Its Ecosystem

Delta Lake provides ACID transactions and metadata management for data stored in cloud object storage. It works as a storage layer that sits on top of existing data lakes to add reliability and performance features.

Key Delta Lake features include:

- Time travel capabilities for data versioning

- Schema enforcement and evolution

- Data layout optimization, such as compacting small files

- Support for streaming and batch processing
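Time travel means reading the table as it existed at an earlier version. The sketch below is a simplified, hypothetical model (`VersionedTable` is not a Delta Lake API) that keeps a full snapshot per commit; Delta Lake itself reconstructs older versions from its transaction log and data files instead of copying the whole table on every commit.

```python
import copy

class VersionedTable:
    """Toy table that keeps an immutable snapshot for every committed version."""

    def __init__(self):
        self._versions = [[]]  # version 0 is the empty table

    @property
    def version(self):
        return len(self._versions) - 1

    def commit(self, add=(), remove_ids=()):
        # Build the next snapshot from the latest one; older snapshots are never mutated.
        removed = set(remove_ids)
        current = self._versions[-1]
        snapshot = [copy.deepcopy(r) for r in current if r["id"] not in removed]
        snapshot += [copy.deepcopy(r) for r in add]
        self._versions.append(snapshot)
        return self.version

    def read(self, as_of_version=None):
        # Default to the latest version; otherwise "travel" back to an earlier one.
        v = self.version if as_of_version is None else as_of_version
        return copy.deepcopy(self._versions[v])

table = VersionedTable()
table.commit(add=[{"id": 1, "qty": 5}, {"id": 2, "qty": 3}])  # version 1
table.commit(remove_ids=[2])                                  # version 2, e.g. an accidental delete
latest = table.read()
before_delete = table.read(as_of_version=1)  # the deleted row is still readable here
```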

Databricks originally developed Delta Lake and released it as an open-source project in 2019. The technology now supports multiple programming languages, including Python, Scala, and SQL.

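Delta Lake stores a transaction log (the `_delta_log` directory) alongside the data files. Readers always see a consistent snapshot of the table, and concurrent writers use optimistic concurrency control: if two commits conflict, one of them fails and can be retried instead of corrupting the table.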
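Optimistic concurrency over an append-only log can be sketched as follows. The `TransactionLog` class and its simplified conflict rule (two commits conflict only if both remove the same data file) are assumptions for illustration; real implementations use richer conflict checks and rely on the storage layer to create each new log entry atomically.

```python
class CommitConflict(Exception):
    pass

class TransactionLog:
    """Toy append-only log: entry N lists the data files added and removed by commit N."""

    def __init__(self):
        self.entries = []

    def latest_version(self):
        return len(self.entries) - 1  # -1 means the table has no commits yet

    def try_commit(self, read_version, added=(), removed=()):
        # Optimistic check: inspect only commits made after the writer's snapshot.
        for entry in self.entries[read_version + 1:]:
            if set(removed) & set(entry["removed"]):
                raise CommitConflict("a concurrent commit already removed one of these files")
        self.entries.append({"added": list(added), "removed": list(removed)})
        return self.latest_version()

    def live_files(self):
        # Replay the log to find which data files make up the current table.
        files = set()
        for entry in self.entries:
            files -= set(entry["removed"])
            files |= set(entry["added"])
        return files

log = TransactionLog()
log.try_commit(-1, added=["part-0.parquet"])  # version 0
snapshot = log.latest_version()               # writers A and B both start from here
log.try_commit(snapshot, added=["part-1.parquet"], removed=["part-0.parquet"])  # A rewrites part-0

conflicted = False
try:
    # B also tries to rewrite part-0 based on the same, now stale, snapshot.
    log.try_commit(snapshot, added=["part-2.parquet"], removed=["part-0.parquet"])
except CommitConflict:
    conflicted = True  # B must re-read the latest version and retry

log.try_commit(snapshot, added=["part-3.parquet"])  # a blind append does not conflict
```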

Apache Iceberg and Apache Hudi

Apache Iceberg offers another table format for lakehouse architectures. It provides similar features to Delta Lake but uses a different technical approach for metadata management.

Iceberg supports schema evolution and hidden partitioning. These features help organizations manage changing data structures over time.
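Hidden partitioning means writers and readers work with the source column (here a timestamp) while the table derives partition values itself. The sketch below assumes a hypothetical `PartitionedTable` class with a day-level transform; Iceberg's actual transforms and metadata structures are more involved, but the effect is similar: a filter on the timestamp skips partitions that cannot match.

```python
from datetime import date, datetime

def day_transform(ts: datetime) -> date:
    """Partition transform: derive a day partition value from a timestamp."""
    return ts.date()

class PartitionedTable:
    def __init__(self, transform):
        self.transform = transform
        self.partitions = {}  # partition value -> list of rows

    def append(self, row):
        # Writers never supply a partition value; the table derives it from "ts".
        key = self.transform(row["ts"])
        self.partitions.setdefault(key, []).append(row)

    def scan(self, start: datetime, end: datetime):
        """Filter on the source column; partitions outside the range are never read."""
        lo, hi = self.transform(start), self.transform(end)
        scanned = [k for k in self.partitions if lo <= k <= hi]
        rows = [r for k in scanned for r in self.partitions[k] if start <= r["ts"] <= end]
        return rows, scanned

t = PartitionedTable(day_transform)
t.append({"ts": datetime(2024, 5, 1, 9, 30), "event": "login"})
t.append({"ts": datetime(2024, 5, 2, 14, 0), "event": "purchase"})
t.append({"ts": datetime(2024, 5, 3, 8, 15), "event": "logout"})
rows, scanned = t.scan(datetime(2024, 5, 2), datetime(2024, 5, 2, 23, 59))
```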

Apache Hudi focuses on three main use cases:

- Incremental data processing

- Data lake ingestion

- Real-time analytics

Hudi provides copy-on-write and merge-on-read storage types. Users can choose the option that best fits their performance needs.
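The trade-off between the two storage types can be sketched with two toy classes. Both are hypothetical and greatly simplified: copy-on-write rewrites the base data on every update (represented here by rebuilding a dict), while merge-on-read appends updates to a log that readers merge until a compaction folds the log into the base.

```python
class CopyOnWriteTable:
    """Updates rewrite the base data immediately: costlier writes, simple reads."""

    def __init__(self, rows):
        self.base = {r["id"]: r for r in rows}
        self.files_rewritten = 0

    def upsert(self, row):
        self.base = {**self.base, row["id"]: row}  # stands in for rewriting a whole file
        self.files_rewritten += 1

    def read(self):
        return sorted(self.base.values(), key=lambda r: r["id"])

class MergeOnReadTable:
    """Updates go to a log: cheap writes, but readers merge base + log until compaction."""

    def __init__(self, rows):
        self.base = {r["id"]: r for r in rows}
        self.log = []

    def upsert(self, row):
        self.log.append(row)

    def read(self):
        merged = dict(self.base)
        for row in self.log:  # later log entries win
            merged[row["id"]] = row
        return sorted(merged.values(), key=lambda r: r["id"])

    def compact(self):
        # Fold the log into the base so future reads no longer need to merge.
        self.base = {r["id"]: r for r in self.read()}
        self.log = []

initial = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
cow, mor = CopyOnWriteTable(initial), MergeOnReadTable(initial)
for table in (cow, mor):
    table.upsert({"id": 2, "v": "B"})
same_before_compaction = cow.read() == mor.read()
mor.compact()
```

Both tables return the same result; they differ in where the merge cost is paid, at write time or at read time.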

Both technologies work with popular query engines like Apache Spark and Presto. This compatibility gives organizations flexibility in their tool choices.

Leading Solutions: Databricks Lakehouse and Microsoft Fabric

The Databricks Lakehouse Platform provides a unified environment that runs compute over data kept in cloud object storage. It includes built-in support for machine learning, data engineering, and business intelligence workflows.

Azure Databricks extends the platform with Microsoft cloud services. Users get integration with Microsoft Entra ID (formerly Azure Active Directory) and other Microsoft tools.

Databricks offers several key components:

- Delta Lake for data storage

- MLflow for machine learning lifecycle management

- Unity Catalog for data governance

- Collaborative notebooks for development

Microsoft Fabric Lakehouse provides an alternative approach within the Microsoft ecosystem. It connects with Power BI for reporting and Azure Synapse for analytics.

Fabric uses OneLake as its storage foundation. This creates a single data estate across different Microsoft services.

Integration with Cloud Object Stores

Lakehouse platforms build on top of existing cloud storage services. Amazon S3, Azure Data Lake Storage, and Google Cloud Storage provide the foundation layer.

Cloud object stores offer low-cost storage for large data volumes. They support both structured and unstructured data formats.

Integration benefits include:

- Separation of compute and storage costs

- Ability to scale storage independently

- Support for multiple data formats

- Integration with existing cloud services

Organizations can connect lakehouse platforms to Amazon Redshift or Google BigQuery for specific workloads. This approach allows companies to use specialized tools while maintaining a unified data architecture.

The separation of storage and compute allows teams to spin up processing power only when needed. This reduces costs compared to traditional data warehouse approaches.

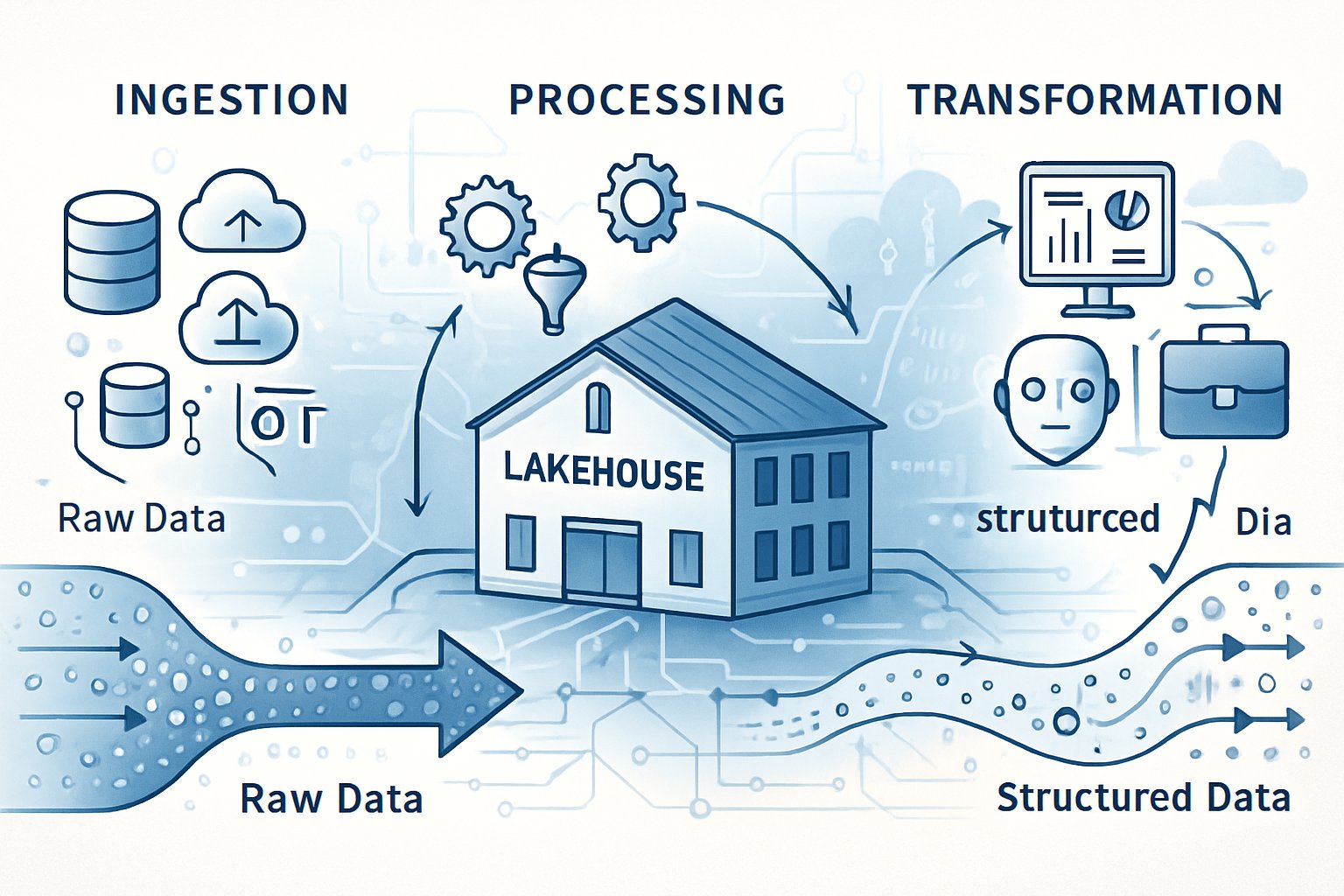

Data Organization: Ingestion, Processing, and Transformation

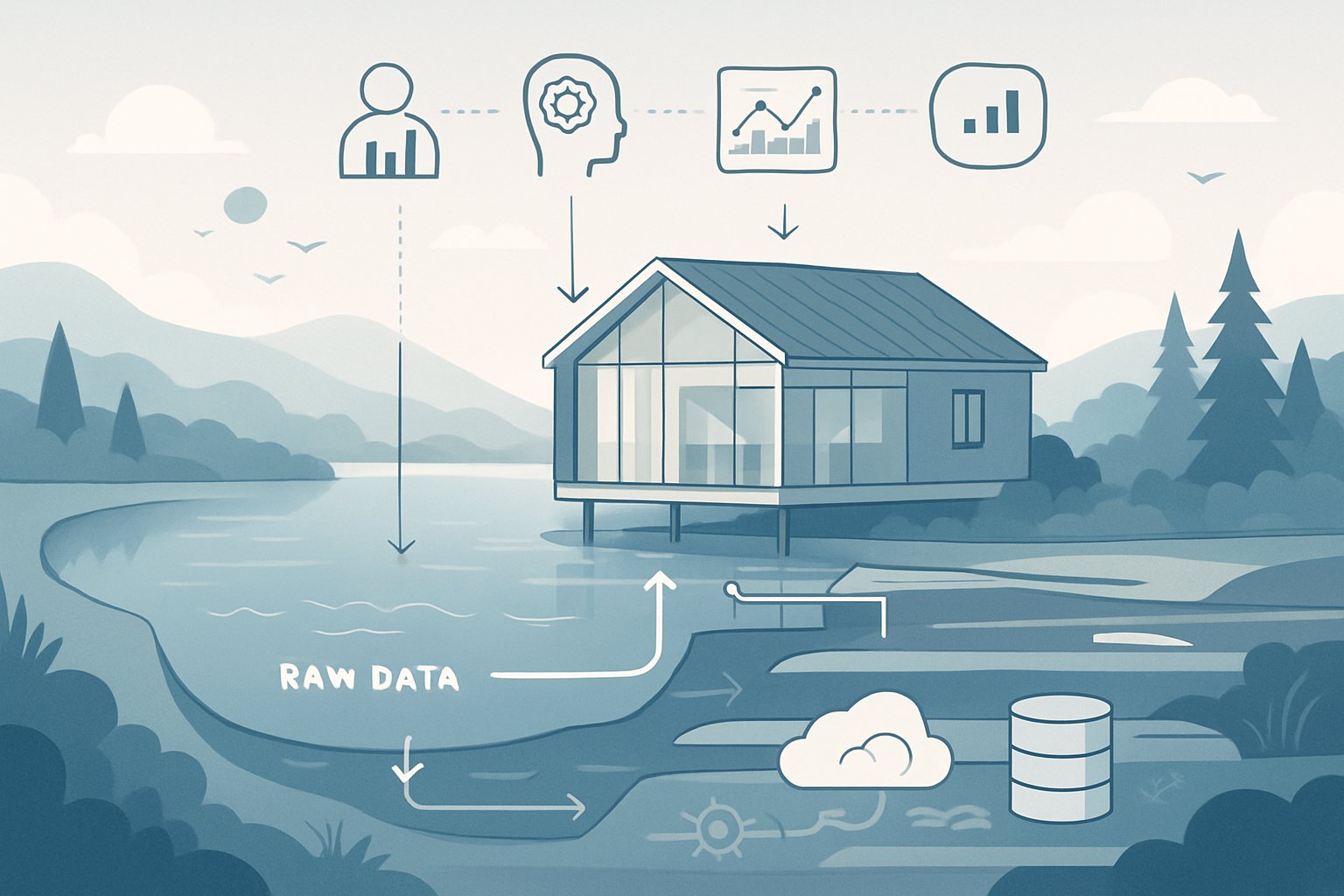

Data lakehouses handle diverse data through flexible ingestion patterns, support both batch and streaming pipelines, and offer multiple transformation approaches including ETL, ELT, and change data capture methods.

Data Sources and Ingestion Patterns

Data ingestion forms the foundation of lakehouse architecture. Organizations pull data from multiple sources including databases, APIs, file systems, and streaming platforms.

Common data sources include:

- Relational databases (MySQL, PostgreSQL, Oracle)

- NoSQL databases (MongoDB, Cassandra)

- SaaS applications (Salesforce, HubSpot)

- File systems and cloud storage

- IoT devices and sensors

The ingestion layer extracts data from these sources using different patterns. Pull-based ingestion schedules regular data extraction. Push-based ingestion receives data as it becomes available.
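Scheduled pull-based ingestion is often incremental: each run extracts only rows changed since the last run, tracked with a high-water mark. The sketch below assumes a hypothetical source table with an `updated_at` column and a helper named `pull_incremental`; a real job would persist the watermark between runs.

```python
from datetime import datetime

# Hypothetical source rows; "updated_at" is the column the pull job filters on.
SOURCE = [
    {"id": 1, "updated_at": datetime(2024, 6, 1, 8, 0)},
    {"id": 2, "updated_at": datetime(2024, 6, 1, 9, 0)},
]

def pull_incremental(source, watermark):
    """Return rows changed after the watermark, plus the new watermark to persist."""
    new_rows = [r for r in source if r["updated_at"] > watermark]
    if not new_rows:
        return [], watermark
    return new_rows, max(r["updated_at"] for r in new_rows)

wm = datetime.min
batch1, wm = pull_incremental(SOURCE, wm)  # first scheduled run picks up both rows
SOURCE.append({"id": 3, "updated_at": datetime(2024, 6, 1, 10, 0)})
batch2, wm = pull_incremental(SOURCE, wm)  # next run picks up only the new row
```

A strict `>` comparison can miss rows that share the watermark timestamp but arrive later, which is one reason many pipelines prefer change data capture for critical tables.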

Apache Kafka serves as a popular streaming platform for real-time data ingestion. It handles high-volume data streams from multiple sources simultaneously.

Cloud platforms offer managed ingestion services. AWS Glue provides serverless data integration for extracting and loading data into lakehouses.

Batch and Real-Time Data Pipelines

Data pipelines move and process information through the lakehouse system. Organizations choose between batch processing and real-time processing based on business needs.

Batch processing handles large volumes of data at scheduled intervals. This approach works well for daily reports, historical analysis, and non-urgent transformations.

Real-time data processing handles streaming data as it arrives. Financial trading systems and fraud detection require immediate processing of incoming data.

Many organizations use hybrid approaches. Critical data flows through real-time pipelines while less urgent data uses batch processing.

Pipeline characteristics:

| Type | Latency | Volume | Use Cases |
|---|---|---|---|
| Batch | Hours to days | Very high | Reports, analytics |
| Real-time | Seconds to minutes | Medium to high | Monitoring, alerts |
| Micro-batch | Minutes | High | Near real-time analytics |
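Micro-batching sits between the two extremes: a stream is cut into small batches, and each batch is processed like a tiny batch job. For simplicity, this hypothetical sketch groups events by count; streaming engines usually trigger micro-batches on a time interval instead.

```python
from itertools import islice

def micro_batches(events, batch_size):
    """Group a (possibly unbounded) event iterator into small batches."""
    it = iter(events)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch

stream = ({"amount": a} for a in [5, 3, 7, 1, 4])  # stands in for a live event stream
running_total = 0
totals_after_each_batch = []
for batch in micro_batches(stream, batch_size=2):
    # Process the batch, then record ("commit") the updated result.
    running_total += sum(e["amount"] for e in batch)
    totals_after_each_batch.append(running_total)
```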

ETL, ELT, and Change Data Capture Approaches

Data transformation happens through different methods in lakehouse environments. Each approach offers specific advantages for different scenarios.

ETL (Extract, Transform, Load) transforms data before loading it into storage. This traditional approach lets teams validate and clean data before it lands, but it requires more processing upfront.

ELT (Extract, Load, Transform) loads raw data first and transforms it later. Modern lakehouses favor ELT because they can store large volumes of raw data cheaply.

Batch ETL processes large datasets at scheduled times. Monthly financial reports often use batch ETL to process all transactions together.

Change Data Capture (CDC) tracks changes in source systems and replicates only modified records. This reduces processing overhead and keeps data current.

CDC captures inserts, updates, and deletes from source databases. The lakehouse applies these changes to maintain synchronized data copies.
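Applying a CDC feed amounts to replaying ordered change events against a keyed copy of the table. The sketch below uses a hypothetical event shape (`op`, `key`, `row`) and a plain dict as the replica; in a real lakehouse this step is usually expressed as a MERGE (upsert) into the target table.

```python
def apply_cdc(target, changes):
    """Apply ordered change events (op, key, row) to a keyed target table."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            target[key] = change["row"]  # upsert semantics
        elif op == "delete":
            target.pop(key, None)        # deleting a missing key is a no-op
        else:
            raise ValueError(f"unknown op {op!r}")
    return target

replica = {1: {"name": "Ada", "tier": "free"}, 2: {"name": "Bo", "tier": "pro"}}
changes = [
    {"op": "update", "key": 1, "row": {"name": "Ada", "tier": "pro"}},
    {"op": "delete", "key": 2},
    {"op": "insert", "key": 3, "row": {"name": "Cy", "tier": "free"}},
]
apply_cdc(replica, changes)
```

Only the three changed records are processed, rather than a full reload of the source table.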

Organizations often combine these approaches. Critical business data uses CDC for real-time updates while historical data uses batch ETL processing.

Managing Diverse Data Types and Ensuring Data Quality

A lakehouse architecture handles multiple data formats while maintaining high standards for accuracy and organization. The system uses advanced cataloging tools and metadata layers to track all information assets across the platform.

Structured, Semi-Structured, and Unstructured Data

Structured data includes traditional database tables with fixed schemas. This data fits into rows and columns with clear relationships. Examples include customer records, sales transactions, and inventory counts.

Semi-structured data contains some organization but lacks rigid formatting. JSON files, XML documents, and CSV files fall into this category. They have identifiable patterns but allow for flexibility in their structure.

Unstructured data has no predefined format or organization. This includes text documents, images, videos, audio files, and social media posts. These files require special processing to extract meaningful insights.

The lakehouse stores all three types in their native formats. It uses different processing engines for each data type. Structured data gets handled by SQL engines while unstructured data uses machine learning tools.

Data Quality, Integrity, and Cataloging

Data quality measures how accurate and complete information is across the system. Lakehouse pipelines can run automated checks to find missing values, duplicates, and errors, flagging problems before they affect business decisions.
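A basic quality check for missing values and duplicate keys fits in a few lines. The `quality_report` helper and the sample rows are hypothetical; dedicated data quality frameworks offer many more rule types, but the principle of scanning rows and reporting violations is the same.

```python
def quality_report(rows, key, required):
    """Return a list of issues: missing required values and duplicate keys."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        for col in required:
            if row.get(col) in (None, ""):
                issues.append(f"row {i}: missing {col}")
        if row.get(key) in seen:
            issues.append(f"row {i}: duplicate {key}={row[key]}")
        seen.add(row.get(key))
    return issues

rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "a@example.com"},
]
issues = quality_report(rows, key="id", required=["id", "email"])
```

A pipeline could, for example, quarantine flagged rows instead of promoting them to the next layer.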

Data integrity ensures information stays consistent and trustworthy over time. Table formats record changes in a version history that lets teams audit or roll back changes, and access controls prevent unauthorized modifications.

A data catalog acts like a library system for all stored information. It helps users find relevant datasets quickly. The catalog includes descriptions, tags, and usage statistics for each data source.

Data discovery tools scan the lakehouse to identify new datasets automatically. They create profiles showing data patterns and relationships. Users can search by keywords, data types, or business terms.

Schema Management and Metadata

The metadata layer stores information about data structure, location, and usage patterns. It tracks column names, data types, file sizes, and creation dates. This layer helps users understand what each dataset contains.

In Databricks, Unity Catalog provides centralized governance across the lakehouse. It manages user permissions and access controls. The system ensures only authorized people can view or modify sensitive information.

Schema evolution allows data structures to change without breaking existing applications. New columns can be added while older queries keep working. Depending on the table format and its configuration, the system can apply these changes automatically.

Metadata includes business glossaries that define technical terms. These help teams understand data meaning and context. The system also tracks data lineage showing how information flows between different sources.
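Lineage is naturally modeled as a graph from each dataset to the datasets it is derived from. The sketch below uses hypothetical dataset names and a breadth-first walk to answer a common lineage question: which upstream sources does this table ultimately depend on?

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets it is derived from.
UPSTREAM = {
    "gold.revenue_by_region": ["silver.orders", "silver.customers"],
    "silver.orders": ["bronze.orders_raw"],
    "silver.customers": ["bronze.crm_export"],
}

def all_upstream(dataset, graph):
    """Breadth-first walk returning every dataset the given one depends on."""
    found, queue = [], deque(graph.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent not in found:
            found.append(parent)
            queue.extend(graph.get(parent, []))
    return found

sources = all_upstream("gold.revenue_by_region", UPSTREAM)
```

Reversing the edges answers the opposite question: which downstream tables are affected if a source changes.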

Analytics, BI, and Advanced Use-Cases

Data lakehouses excel at supporting both traditional business intelligence workflows and modern machine learning pipelines. Organizations can run SQL queries for reporting while simultaneously training models on the same data platform.

Business Intelligence and SQL Analytics

Business intelligence teams can connect their existing BI tools directly to lakehouse data without moving or copying files. Popular tools like Tableau, Power BI, and Looker work with lakehouse platforms through standard SQL interfaces.

SQL analytics in a lakehouse environment supports complex queries across structured and semi-structured data. Users can join sales data with customer logs or product reviews in a single query. This flexibility reduces the time spent preparing data for analysis.

The lakehouse stores data in open formats like Parquet and Delta Lake. These formats optimize query performance while maintaining compatibility with various analytics tools. Business analysts can access the same data that data scientists use for machine learning projects.

Key BI capabilities include:

- Direct SQL querying without data movement

- Support for complex joins across data types

- Integration with existing BI tools

- Optimized storage formats for fast queries

Real-Time Insights and Dashboards

Real-time analytics capabilities allow organizations to monitor business metrics as events happen. Streaming data from websites, mobile apps, and IoT devices flows directly into the lakehouse for immediate analysis.

Dashboards can display live metrics like website traffic, sales performance, or system health. These real-time insights help teams respond quickly to changing conditions or emerging problems.

The lakehouse architecture handles both batch and streaming data in the same platform. Teams can combine historical trends with current data to create comprehensive dashboards. This unified approach eliminates the complexity of managing separate systems for different data speeds.

Real-time inference becomes possible when machine learning models run directly on streaming data. Organizations can detect fraud, recommend products, or trigger alerts within seconds of receiving new information.

Enabling Data Science and Machine Learning

Data science teams benefit from having all organizational data available in one platform. They can explore datasets without requesting data exports or waiting for IT teams to provision new systems.

Machine learning workflows integrate seamlessly with lakehouse storage. Popular frameworks like MLflow track experiments, manage model versions, and handle deployment processes. Data scientists can move from exploration to production without changing platforms.

Feature stores within the lakehouse environment help teams share and reuse machine learning features. These centralized repositories prevent duplicate work and ensure consistency across different models and teams.

ML capabilities include:

- Native support for Python and R

- Integration with MLflow for experiment tracking

- Automated feature engineering pipelines

- Version control for datasets and models

Operational Analytics and Model Serving

Model serving infrastructure allows trained machine learning models to make predictions on new data automatically. The lakehouse platform can host models and serve results through APIs or batch processes.

Operational analytics monitor both business metrics and system performance. Teams can track model accuracy, data quality, and platform health from unified dashboards. This monitoring helps maintain reliable analytics operations.

The platform supports different serving patterns based on business needs. Real-time inference handles immediate prediction requests, while batch scoring processes large datasets on scheduled intervals. Both approaches use the same underlying data and models.
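The point that both serving patterns share one model can be shown with a small sketch. The rule-based `risk_points` function is a hypothetical stand-in for a trained model; `batch_score` and `serve` are illustrative names for the scheduled and request-driven paths.

```python
def risk_points(txn):
    """Hypothetical stand-in for a trained fraud model: simple rule-based points."""
    points = 0
    if txn["amount"] > 1000:
        points += 2
    if txn["new_device"]:
        points += 1
    return points

def predict(txn, threshold=2):
    # Shared prediction logic used by both serving patterns.
    return risk_points(txn) >= threshold

def batch_score(transactions):
    """Scheduled batch scoring: score a whole table in one pass."""
    return {t["id"]: predict(t) for t in transactions}

def serve(request):
    """Real-time inference: score a single incoming request."""
    return {"id": request["id"], "flagged": predict(request)}

history = [
    {"id": 1, "amount": 1500, "new_device": False},
    {"id": 2, "amount": 40, "new_device": True},
    {"id": 3, "amount": 2500, "new_device": True},
]
nightly = batch_score(history)
live = serve({"id": 4, "amount": 1200, "new_device": True})
```

Because both paths call the same `predict` function, batch and real-time results stay consistent for the same input.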

Organizations can implement automated retraining pipelines that update models when new data arrives. This capability ensures that machine learning systems stay current with changing business conditions and data patterns.

Lakehouse Adoption: Implementation and Best Practices

Successful lakehouse adoption requires careful planning around deployment options, cost management, and governance frameworks. Organizations must balance technical requirements with business objectives while ensuring scalable and secure implementations.

Deployment Considerations and Managed Services

Organizations can choose between self-managed and fully managed services when implementing lakehouse architecture. Managed services reduce operational overhead: Azure Databricks, for example, handles cluster provisioning and infrastructure management, while AWS Lake Formation simplifies building and governing a data lake on Amazon S3.

Serverless compute options eliminate the need for cluster management. These services automatically scale resources based on workload demands. Users only pay for actual compute time used during data processing tasks.

Cloud providers offer pre-built integrations with storage services like Amazon S3 and Azure Data Lake Storage. These connections simplify data ingestion from multiple sources. The managed approach also includes automatic software updates and security patches.

Self-managed deployments provide more control over configurations and customizations. However, they require dedicated teams to handle system maintenance and monitoring. Organizations must weigh control benefits against increased operational complexity.

Cost Efficiency and Scalability

Lakehouse architecture separates storage and compute resources to optimize costs. Organizations store large amounts of data in low-cost object storage while scaling compute power only when needed.

Operational efficiency improves through automated resource management. Systems can scale up during peak processing times and scale down during idle periods. This elastic scaling prevents over-provisioning of expensive compute resources.

Storage costs remain predictable because data stays in low-cost object storage, in compressed columnar formats such as Parquet, which Delta Lake uses for its data files. Compression further reduces overall storage requirements.

Compute optimization strategies include using spot instances for interruption-tolerant workloads such as batch processing. Spot capacity is offered at steep discounts to on-demand pricing (AWS advertises up to 90%), although actual savings vary by workload and region. Resource scheduling tools help run flexible workloads at times when spare capacity is cheaper or more available.

Data Governance and Security

Data and AI governance frameworks establish clear policies for data access and usage across the organization. These frameworks define who can access specific datasets and how data flows through different systems.

Access control mechanisms use role-based permissions to restrict data access. Fine-grained controls allow different user groups to access only relevant data subsets. Integration with identity providers like Active Directory streamlines user management.
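Role-based, fine-grained access control combines a row filter (which records a role may see) with a column grant (which fields it may see). The policy table and `query` function below are hypothetical and stand in for what a governance catalog enforces inside the query engine.

```python
# Hypothetical policies: visible columns and a row filter per role.
POLICIES = {
    "analyst": {"columns": {"order_id", "region", "amount"},
                "row_filter": lambda r: r["region"] == "EU"},
    "admin": {"columns": {"order_id", "region", "amount", "email"},
              "row_filter": lambda r: True},
}

def query(rows, role):
    policy = POLICIES.get(role)
    if policy is None:
        raise PermissionError(f"role {role!r} has no access")
    visible = []
    for row in rows:
        if policy["row_filter"](row):  # row-level control
            # Column-level control: drop columns outside the role's grant.
            visible.append({c: v for c, v in row.items() if c in policy["columns"]})
    return visible

ORDERS = [
    {"order_id": 1, "region": "EU", "amount": 10.0, "email": "x@example.com"},
    {"order_id": 2, "region": "US", "amount": 20.0, "email": "y@example.com"},
]
analyst_view = query(ORDERS, "analyst")
admin_view = query(ORDERS, "admin")
```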

Data lineage tracking shows how data moves and transforms throughout the lakehouse. This visibility helps teams understand data sources and maintain compliance with regulations. Automated cataloging tools discover and classify sensitive data automatically.

Encryption protects data both at rest and in transit. Most cloud providers offer built-in encryption services that integrate seamlessly with lakehouse platforms. Regular security audits ensure compliance with industry standards and regulations.

Emerging Trends and Future Outlook for Lakehouse Architecture

Lakehouse architecture continues to evolve rapidly with AI integration becoming central to data strategies. Organizations are building unified platforms that support both traditional analytics and machine learning workloads on shared infrastructure.

Integration with AI Workloads

AI workloads are driving the next phase of lakehouse evolution. Modern lakehouses increasingly support model training and inference, including real-time inference, directly on stored data without complex data movement.

Organizations use lakehouses to build AI-ready data infrastructure. This includes automated feature stores that prepare data for machine learning models. The architecture supports both batch and streaming AI workloads.

Key AI capabilities include:

- Real-time fraud detection systems

- Predictive analytics for inventory management

- Customer behavior analysis using machine learning

- IoT data processing for smart devices

Data engineers increasingly focus on platform engineering rather than only on traditional pipeline building. They design systems that let software engineers easily access data for AI applications.

Machine learning models can train directly on lakehouse data. This removes the need to copy data between systems for different AI workloads.

Evolution of Data Architectures

Data architectures are moving toward composable systems that offer flexibility for future growth. Organizations build modular components that work together instead of monolithic platforms.

The industry is consolidating around fewer table formats. Apache Iceberg and Delta Lake have the broadest support across query engines. This standardization reduces complexity for organizations.

Cloud platforms show different maturity levels:

| Platform | Maturity Level | Key Features |
|---|---|---|
| Databricks | High | Native Delta Lake support |
| AWS | Growing | Increasing Apache Iceberg adoption |
| Microsoft Fabric | High | Integrated lakehouse experience |
| Snowflake | Moderate | Apache Iceberg tables on external cloud storage |

Catalogs are becoming more important for data management. Technical and business catalogs merge to provide unified views of data assets across organizations.

Unified Data and AI Platforms

Companies build data intelligence platforms that combine storage, processing, and AI capabilities. These platforms handle structured and unstructured data in single systems.

Shared storage principles let multiple tools analyze the same data. Organizations transform data once and use it across different applications. This approach cuts costs and simplifies management.

Platform benefits include:

- Single source of truth for all data

- Reduced data duplication costs

- Faster time to insights

- Support for diverse workloads

Future platforms are expected to automate more data discovery and governance, with AI helping to optimize data management processes and reduce manual intervention.

The convergence of analytics and AI workloads continues. Organizations need platforms that support both traditional business intelligence and advanced machine learning use cases seamlessly.

Frequently Asked Questions

Data lakehouse architecture raises specific questions about its implementation, tools, and performance compared to traditional systems. These questions cover architectural differences, real-world applications, vendor-specific solutions, and practical deployment scenarios.

How does Data Lakehouse architecture differ from traditional Data Warehouse architecture?

Data warehouses store only structured and semi-structured data in proprietary systems. They focus on SQL-based analytics and business intelligence queries.

Lakehouse architecture builds on top of existing data lakes. It adds traditional data warehousing capabilities like ACID transactions and fine-grained security to low-cost storage systems.

With a traditional data warehouse, data often has to move between systems for different use cases. Organizations typically keep most data in lakes and copy subsets into warehouses for fast SQL queries.

Lakehouses store all data types in one system. They support structured tables, images, videos, audio files, and text documents without data movement.

Traditional warehouses have limited machine learning support. They cannot run popular Python libraries like TensorFlow or PyTorch without exporting data first.

Lakehouses provide direct access to data using open APIs. Data scientists can use ML frameworks directly on the stored data without conversion steps.

Can you illustrate the components of a Lakehouse architecture with a diagram?

A lakehouse architecture contains three main layers: storage, metadata, and compute. The storage layer uses cloud object storage like AWS S3 or Azure Data Lake Storage.

The metadata layer manages data organization and governance. It handles schema enforcement, ACID transactions, and access control across all stored files.

The compute layer provides processing engines for different workloads. It includes SQL engines for business intelligence and machine learning frameworks for AI applications.

Open source technologies connect these layers together. Delta Lake, Apache Hudi, and Apache Iceberg provide table formats that enable warehouse features on lake storage.

What are some real-world examples of Data Lakehouse implementations?

Netflix, where Apache Iceberg was originally developed, uses lakehouse architecture to manage streaming data and content recommendations. They store viewing patterns, content metadata, and user preferences in unified storage systems.

Airbnb implements lakehouses for host and guest analytics. Their system processes booking data, property information, and user behavior data for business insights.

Major retail companies use lakehouses for customer analytics. They combine transaction data, inventory systems, and social media information for personalized marketing.

Financial services firms deploy lakehouses for risk management. They analyze transaction patterns, market data, and regulatory reports in single platforms.

How is Databricks Lakehouse architecture unique, and what benefits does it offer?

Databricks popularized the term “data lakehouse” and built their platform around this concept. Their architecture implements warehouse features directly on top of data lake storage.

The Databricks platform uses Delta Lake as its core technology. Delta Lake adds ACID transactions, schema enforcement, and time travel capabilities to data lakes.

Their unified analytics workspace combines data engineering, data science, and machine learning. Teams can collaborate on the same platform without moving data between tools.

Databricks provides automatic optimization features. These include file compaction, data skipping, and caching to improve query performance on lake storage.
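Data skipping relies on per-file statistics kept in table metadata, such as the minimum and maximum value of a column in each file. The sketch below uses hypothetical file names and statistics to show the pruning rule: a file is read only if its value range overlaps the query's filter range.

```python
# Hypothetical per-file min/max statistics of the kind kept in table metadata.
FILE_STATS = [
    {"path": "part-0.parquet", "min_date": "2024-01-01", "max_date": "2024-01-31"},
    {"path": "part-1.parquet", "min_date": "2024-02-01", "max_date": "2024-02-29"},
    {"path": "part-2.parquet", "min_date": "2024-03-01", "max_date": "2024-03-31"},
]

def files_to_scan(stats, start, end):
    """Keep only files whose [min, max] range overlaps the query's [start, end] range."""
    # ISO-8601 date strings compare correctly as plain strings.
    return [f["path"] for f in stats if f["max_date"] >= start and f["min_date"] <= end]

february_files = files_to_scan(FILE_STATS, "2024-02-10", "2024-02-20")
```

Sorting or clustering data on frequently filtered columns narrows each file's range, which makes this pruning more effective.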

What are the key tools used in setting up a Data Lakehouse environment?

Open source table formats form the foundation of lakehouse systems. Delta Lake, Apache Hudi, and Apache Iceberg provide transaction capabilities on object storage.

Cloud storage services provide the underlying data layer. AWS S3, Azure Data Lake Storage, and Google Cloud Storage offer scalable, low-cost storage options.

Query engines enable SQL analytics on lakehouse data. Apache Spark, Presto, and Trino can process data stored in open table formats.

Catalog systems manage metadata and data discovery. Apache Hive Metastore, AWS Glue Catalog, and Unity Catalog track schema and table information.

Orchestration tools coordinate data pipelines. Apache Airflow, Prefect, and cloud-native schedulers manage ETL and data processing workflows.

What are the practical use-cases where a Lakehouse architecture outperforms other data management solutions?

Real-time analytics benefit from lakehouse streaming capabilities. Organizations can process live data streams while maintaining historical data in the same system.

Machine learning workflows perform better with direct data access. Data scientists avoid ETL processes and work with raw data in its original formats.

Multi-modal analytics require diverse data types. Lakehouses handle structured sales data alongside unstructured customer feedback and product images.

Cost optimization drives lakehouse adoption for large datasets. Organizations reduce storage costs while maintaining query performance for business intelligence.

Data governance improves with unified platforms. Teams apply consistent security policies and access controls across all enterprise data assets.

Cross-functional analytics benefit from single data copies. Marketing, sales, and product teams access the same datasets without data duplication or synchronization issues.