Every database designer faces a critical choice that affects how their system performs and maintains data quality over time. Database normalization and denormalization represent two opposing strategies that shape how information gets stored and retrieved from databases. Understanding when to organize data into separate, structured tables versus combining information for faster access determines whether a database system will excel at maintaining accuracy or delivering speed.

Normalization reduces redundancy by organizing data into separate tables, while denormalization takes the opposite approach by combining related information to speed up queries. Database professionals must understand both techniques because each serves different purposes depending on whether the system prioritizes data integrity or query performance. The choice between these approaches affects everything from storage requirements to system maintenance complexity.

Modern database systems require careful consideration of these design principles because they impact daily operations and long-term scalability. Organizations often use both approaches within the same system, applying normalization to transaction-heavy areas where data accuracy matters most and denormalization to reporting sections where fast data retrieval takes priority. This strategic balance helps create database systems that perform well while maintaining reliable, consistent information.

Key Takeaways

- Normalization organizes data into separate tables to reduce duplication and improve data accuracy

- Denormalization combines tables to speed up queries by accepting some data redundancy

- The best database designs often use both techniques strategically depending on specific system needs

Core Principles of Database Normalization

Database normalization involves organizing data into separate tables to eliminate redundancy and prevent data inconsistencies. This process follows specific rules to create efficient database structures that maintain data integrity.

What Is Normalization?

Normalization is a database design technique that organizes tables to reduce redundancy and dependency of data. Database designers use this method to structure information efficiently.

The process breaks down large tables into smaller, related tables. Each table focuses on a specific entity or concept. Relationships between tables connect related information.

Data normalization organizes data attributes within a database model to increase organization. The method creates logical connections between different pieces of information.

Database management systems benefit from normalized structures. These systems can process queries more efficiently when data follows proper organization rules.

Normal forms represent different levels of normalization:

- First Normal Form (1NF): Eliminates repeating groups

- Second Normal Form (2NF): Removes partial dependencies

- Third Normal Form (3NF): Eliminates transitive dependencies

Goals of Normalization in Databases

Normalization reduces redundancy by organizing data into separate tables with clear relationships. This organization prevents the same information from appearing in multiple places.

Data integrity improves when databases follow normalization principles. Each piece of information exists in only one location. Updates affect the entire system consistently.

Storage space decreases as redundant data disappears. Smaller databases require less disk space and memory. Performance often improves with more efficient storage.

Consistency becomes easier to maintain across the entire database. Changes to one record automatically reflect throughout the system. Users see the same information regardless of how they access it.

Database maintenance becomes simpler with normalized structures. Developers can modify table structures without affecting unrelated data. Backup and recovery processes work more efficiently.

Data Redundancy and Update Anomalies

Data redundancy occurs when the same information appears in multiple database locations. This duplication wastes storage space and creates maintenance problems.

Normalization prevents anomalies during inserts, updates, and deletions. These problems arise when databases store duplicate information incorrectly.

Update anomalies happen when changing one record requires multiple updates. Without normalization, users must remember to update every copy of the same data. Missing updates create inconsistent information.

Insert anomalies prevent adding new records without unnecessary data. Poorly designed tables may require complete information before accepting any new entries.

Delete anomalies remove important information unintentionally. Deleting one record might eliminate the only copy of valuable data stored elsewhere in the same row.

Normalized databases solve these problems by storing each fact only once. Changes affect one location instead of multiple copies throughout the system.

Normal Forms Explained: 1NF to 5NF and Beyond

Database normalization follows a series of rules called normal forms that progressively reduce data redundancy and improve data integrity. Each form builds upon the previous one, with stricter requirements for organizing data into well-structured tables.

First Normal Form (1NF): Atomicity and Uniqueness

First Normal Form establishes basic requirements for organized data storage. Tables must contain only atomic values, meaning each cell holds a single, indivisible piece of information.

The rules for 1NF include:

- Single-valued attributes: Each column contains only one value per row

- Same domain values: All values in a column must be of the same data type

- Unique column names: No duplicate column headers

- Order independence: Row order should not affect data meaning

Consider an employee table with a skills column containing “Python, JavaScript, HTML”. This violates 1NF because one cell contains multiple values.

To fix this, create separate rows for each skill or move skills to a dedicated table. The corrected approach uses an Employee_Skills table with foreign keys linking back to the main employee record.

This elimination of repeating groups prevents data inconsistencies and makes queries more predictable.

Second Normal Form (2NF): Eliminating Partial Dependencies

Second Normal Form requires tables to meet 1NF requirements and eliminate partial dependencies. A partial dependency occurs when non-key columns depend on only part of a composite primary key.

Tables with single-column primary keys automatically satisfy 2NF. Problems arise with composite keys made of multiple columns.

Example: A student grades table uses student_id + subject_id as the primary key. If teacher_name depends only on subject_id, this creates a partial dependency.

Before 2NF:

| student_id | subject_id | grade | teacher_name |

|---|---|---|---|

| 101 | MATH | A | Dr. Smith |

| 101 | ENG | B | Ms. Jones |

After 2NF:

Move teacher_name to the subjects table since it depends only on subject_id, not the full composite key.

This systematic approach eliminates redundancy and prevents update anomalies when teacher assignments change.

Third Normal Form (3NF): Removing Transitive Dependencies

Third Normal Form eliminates transitive dependencies while maintaining 1NF and 2NF compliance. A transitive dependency exists when a non-key column depends on another non-key column rather than the primary key.

In a student table, if student_id is the primary key and department_head depends on department_name (which depends on student_id), this creates a transitive dependency.

Identifying transitive dependencies:

- Column A depends on primary key

- Column B depends on Column A

- Column B transitively depends on primary key through Column A

Solution approach:

Create separate tables for each entity. Move department information to a departments table and reference it through a foreign key.

This prevents data anomalies when department heads change. Instead of updating multiple student records, only the departments table needs modification.

Third Normal Form ensures data dependencies make logical sense by keeping related information together while separating distinct entities.

Boyce-Codd Normal Form (BCNF) and Higher Forms

Boyce-Codd Normal Form represents a stricter version of 3NF. BCNF requires that every determinant (a column that determines other columns) must be a candidate key.

BCNF addresses edge cases where 3NF allows certain anomalies. Tables in BCNF eliminate all redundancy based on functional dependencies.

Fourth Normal Form (4NF) removes multi-valued dependencies. This occurs when two or more independent multi-valued facts about an entity exist in the same table.

Fifth Normal Form (5NF) eliminates join dependencies. Tables in 5NF cannot be decomposed into smaller tables without losing information when rejoined.

Higher normal forms apply to specialized scenarios. Most practical database designs achieve adequate normalization at 3NF or BCNF levels.

The choice between normal forms depends on specific requirements. Higher normalization reduces redundancy but may impact query performance through increased table joins.

Database Denormalization: Concepts and Strategies

Denormalization involves deliberately introducing data redundancy to improve query performance in specific database scenarios. This strategy prioritizes speed over storage efficiency by combining related tables and duplicating information where it provides measurable performance benefits.

What Is Denormalization?

Denormalization is the process of taking a normalized schema and making it non-normalized to enhance system performance for time-critical operations. Database designers use this technique to optimize read-heavy applications.

The process involves combining separate tables into fewer, larger tables. This reduces the number of joins required during queries. Data that was previously stored in multiple related tables gets duplicated across different locations.

Common denormalization techniques include:

- Table merging – Combining related tables into single structures

- Column duplication – Copying frequently accessed data across tables

- Calculated fields – Storing pre-computed values instead of calculating them during queries

- Materialized views – Creating physical copies of complex query results

Unlike normalization, denormalization accepts data redundancy as a trade-off. The goal is faster data retrieval at the expense of additional storage space and potential update complexity.

Why and When to Apply Denormalization

Organizations apply denormalization when query performance becomes more critical than storage efficiency. Read-heavy applications benefit most from this approach since complex joins can significantly slow down data retrieval.

Primary reasons for denormalization:

| Reason | Benefit |

|---|---|

| Faster queries | Eliminates complex joins between multiple tables |

| Simplified reporting | Reduces query complexity for analytics |

| Better user experience | Decreases response times for data-heavy operations |

Data warehouses and analytical systems frequently use denormalized structures. These environments prioritize fast data access over frequent updates. Analytics and reporting systems often prefer denormalized structures to optimize query performance.

Ideal scenarios for denormalization:

- High-volume read operations with minimal writes

- Complex reporting requirements spanning multiple tables

- Performance-critical applications with strict response time requirements

- Data warehouse and business intelligence systems

Organizations should avoid denormalization in transaction-heavy systems where data integrity is paramount.

Balancing Data Redundancy and Performance

Normalization and denormalization are opposing strategies that require careful consideration of trade-offs. Database architects must evaluate storage costs against performance gains when implementing denormalization.

Data redundancy increases storage requirements and creates potential consistency issues. When the same information exists in multiple locations, updates must occur across all copies. This coordination adds complexity to data maintenance procedures.

Key considerations for balance:

- Storage costs – Additional space requirements for duplicated data

- Update complexity – Maintaining consistency across redundant information

- Query frequency – How often data gets accessed versus modified

- Performance requirements – Acceptable response times for critical operations

Many organizations adopt hybrid approaches that combine both strategies. They maintain normalized structures for transactional data while creating denormalized views for reporting purposes.

The decision depends on specific application requirements. Systems with frequent updates benefit from normalization, while read-intensive applications often perform better with selective denormalization.

Normalization vs Denormalization in Database Design

Database normalization and denormalization represent two contrasting approaches to organizing data, each with distinct impacts on performance and data integrity. The choice between these methods depends on specific requirements for data consistency, query speed, and system complexity.

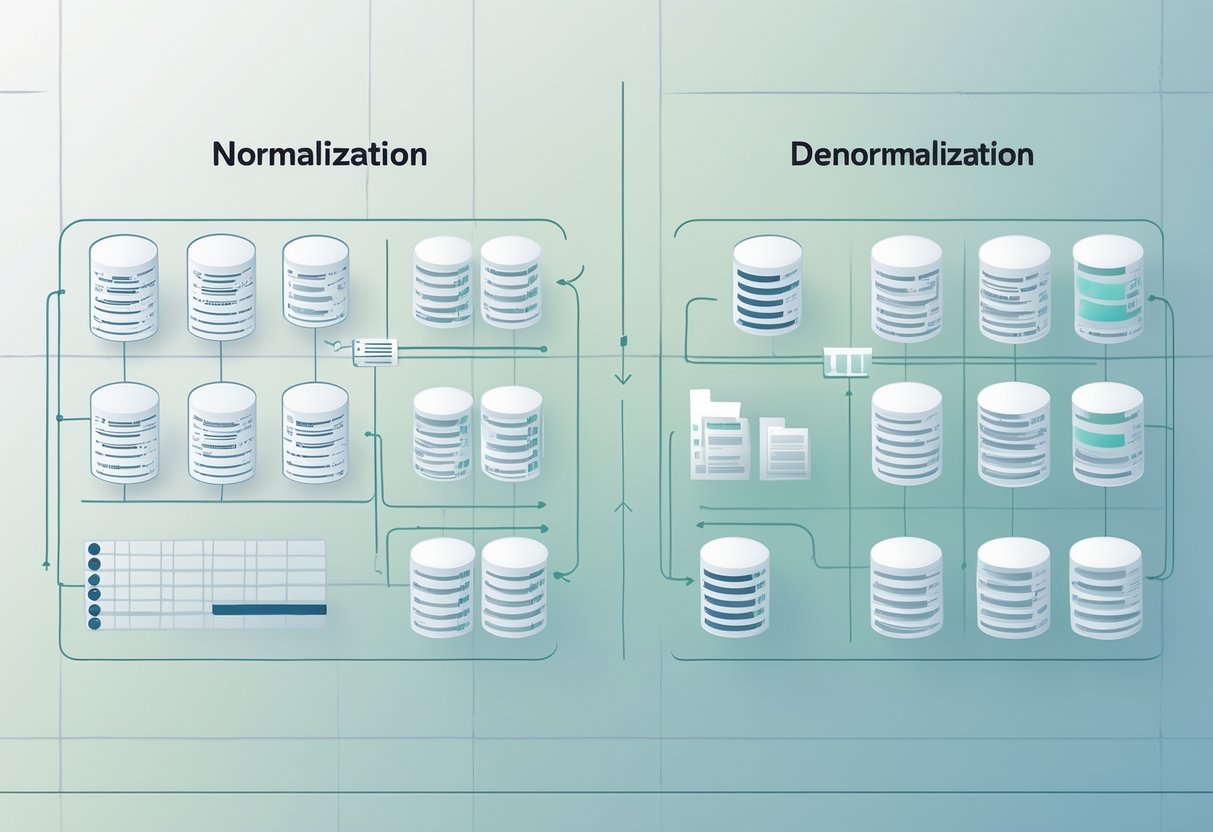

Comparing the Approaches

Normalization focuses on eliminating data redundancy by splitting information into separate, related tables. This approach follows specific rules called normal forms (1NF, 2NF, 3NF, BCNF) to structure data efficiently.

Each piece of information appears only once in the database. Updates require changes in just one location, reducing errors and inconsistencies.

Denormalization intentionally introduces redundancy to improve query performance. Data from multiple normalized tables gets combined into fewer, larger tables.

This approach reduces the number of joins needed for queries. Read operations become faster, but update operations become more complex.

| Aspect | Normalization | Denormalization |

|---|---|---|

| Data Redundancy | Minimal | Intentional |

| Storage Space | Efficient | Higher usage |

| Query Complexity | More joins required | Fewer joins needed |

| Update Operations | Simple | Complex |

| Read Performance | Slower | Faster |

Impact on Data Models and Integrity

Normalization creates data models with multiple interconnected tables linked by foreign keys. This structure maintains strong data integrity by preventing insertion, update, and deletion anomalies.

Changes to customer information occur in one table only. Order records reference customer data through relationships rather than duplicating it.

Normalized databases excel at maintaining data consistency in transactional systems. Financial applications and inventory management systems benefit from this approach.

Denormalization creates flatter data models with fewer relationships between tables. Some data integrity gets sacrificed for performance gains.

Customer names might appear in multiple tables. Updates require careful coordination to maintain consistency across all locations.

Data integrity risks include:

- Update anomalies – Same data stored in multiple places may become inconsistent

- Insertion complications – Adding new records may require data in multiple tables

- Deletion problems – Removing records might cause unintended data loss

Choosing the Right Method for Your Database

Choose normalization when:

- Data accuracy is critical (banking, healthcare, legal systems)

- The system handles many write operations

- Storage space is limited

- Data consistency requirements are strict

Choose denormalization when:

- Read performance is the primary concern

- The system handles analytical queries and reporting

- Data warehouse or business intelligence applications are involved

- Query response time is more important than storage efficiency (see authoritative discussion)

Hybrid approaches combine both methods strategically. Critical transactional data remains normalized while reporting tables use denormalization for faster analytics.

E-commerce databases often normalize order and payment data for integrity. Product catalogs and customer reviews may be denormalized for quick display on web pages.

The decision requires analyzing specific use cases, performance requirements, and data consistency needs. Most real-world databases use elements of both approaches to balance performance with data integrity.

Modern Applications of Normalization and Denormalization

Today’s data systems use both normalized and denormalized approaches based on specific needs. AI systems often require denormalized data for fast processing, while traditional database management systems still rely heavily on normalization for data integrity.

Role in Data Analytics and AI

AI and machine learning systems work best with denormalized data structures. These systems need quick access to large amounts of related information without waiting for complex joins across multiple tables.

Data scientists often create flat, denormalized tables for training machine learning models. This approach eliminates the need for expensive join operations during model training and inference.

Popular AI applications using denormalized data:

- Recommendation engines

- Natural language processing models

- Computer vision systems

- Real-time fraud detection

Analytics platforms like Spark and Hadoop work more efficiently with denormalized datasets. These systems can process wide tables faster than joining normalized data across distributed clusters.

However, some AI workflows still benefit from normalized data. Data preprocessing pipelines often use normalized structures to maintain data quality and reduce storage costs before creating denormalized training sets.

Relevance in Modern DBMS and Data Warehousing

Modern database management systems support both approaches through different storage engines and optimization techniques. Cloud databases automatically choose between normalized and denormalized strategies based on query patterns.

Data warehouses typically use a hybrid approach called dimensional modeling. This method combines normalized dimension tables with denormalized fact tables for optimal query performance.

Common DBMS strategies:

| System Type | Normalization Level | Primary Use Case |

|---|---|---|

| OLTP Systems | Highly Normalized | Transaction Processing |

| Data Warehouses | Mixed Approach | Analytics Queries |

| NoSQL Databases | Denormalized | High-Speed Reads |

Modern column-store databases like Snowflake handle normalized data more efficiently than traditional row-based systems. These systems can perform joins quickly while maintaining the benefits of normalized design.

Real-time analytics systems often use denormalized structures for speed. Stream processing platforms need immediate access to all relevant data without database lookups.

Practical Examples and Best Practices

E-commerce platforms demonstrate both approaches in action. Product catalogs use normalized tables for inventory management but create denormalized views for search and recommendation features.

Social media platforms store user profiles in normalized tables but build denormalized feeds for fast content delivery. This dual approach balances data consistency with read performance.

Best practices for modern applications:

- Use normalized structures for write-heavy operations

- Create denormalized views for read-heavy analytics

- Implement data pipelines to sync between both formats

- Monitor query performance to guide design decisions

Financial systems maintain normalized transaction records for compliance but generate denormalized reports for analysis. This approach ensures audit trails while enabling fast business intelligence queries.

Gaming applications often denormalize player data for real-time leaderboards and matchmaking. The data optimization process requires careful balance between update frequency and read performance.

Microservices architectures may use different normalization strategies per service. Each service optimizes its data structure for specific use cases while maintaining overall system performance.

Frequently Asked Questions

Database designers face common questions about when to apply different normal forms and how these choices affect system performance. The answers depend on specific use cases, data relationships, and whether read or write operations take priority.

What are the different types of normalization in database design?

Database normalization includes several normal forms that build on each other with increasing restrictions. Each form addresses specific types of data problems.

First Normal Form (1NF) requires atomic values in each column. No cell can contain lists or multiple values separated by commas.

Second Normal Form (2NF) eliminates partial dependencies. All non-key columns must depend on the entire primary key, not just part of it.

Third Normal Form (3NF) removes transitive dependencies. Non-key columns cannot depend on other non-key columns.

Boyce-Codd Normal Form (BCNF) handles special cases that 3NF misses. It’s a stricter version of 3NF.

Fourth Normal Form (4NF) and Fifth Normal Form (5NF) address complex multi-valued and join dependencies. Most databases don’t need these advanced forms.

For an in-depth overview, see Database Normalization Basics (PostgreSQL Documentation).

How do the first three normal forms (1NF, 2NF, and 3NF) improve database structure?

1NF creates atomic data by splitting multi-value fields into separate rows or tables. A phone number field containing “555-1234, 555-5678” would become two separate entries.

2NF prevents partial key dependencies in tables with composite primary keys. If a table uses OrderID and ProductID as keys, product details shouldn’t depend only on ProductID.

3NF eliminates indirect relationships between non-key columns. Customer state shouldn’t depend on customer city within the same table – this data belongs in a separate location table.

These forms reduce storage waste and prevent update problems. Changes to repeated data need updates in only one location.

Can you provide examples of data normalization in a practical database scenario?

An e-commerce system shows clear normalization benefits in practice. The unnormalized version might store everything in one large table.

Before normalization: A single Orders table contains customer name, address, product details, and order information. Each row repeats customer and product data.

After normalization: The system splits into four tables – Customers, Products, Orders, and OrderDetails. Customer information appears once in the Customers table.

Product details live in the Products table. The Orders table links to CustomerID, while OrderDetails connects OrderID to ProductID with quantities.

This structure eliminates duplicate customer and product information. Updates to a customer’s address happen in one place instead of multiple order records.

Why might a database designer choose to denormalize data, and what are some examples?

Denormalization improves read performance by reducing the number of table joins needed for common queries. This trade-off makes sense when fast data retrieval matters more than storage efficiency.

Reporting systems often use denormalized tables. A sales summary table might store customer name, total orders, and last purchase date together instead of joining multiple tables.

High-traffic websites benefit from denormalized product catalogs. Storing category names with products avoids joins during page loads.

Analytics platforms use denormalized data warehouses. These systems copy and combine data from normalized operational databases for faster analysis.

The main reasons include faster queries, simpler SQL statements, and better performance for read-heavy applications.

What is the impact of normalization and denormalization on database performance and query efficiency?

Normalized databases excel at write operations because each piece of data exists in one location. Updates, inserts, and deletes happen quickly without maintaining multiple copies.

However, complex queries require multiple joins to combine related data. These joins can slow down read operations, especially with large datasets.

Denormalized databases speed up reads by storing related data together. Queries access fewer tables and perform fewer joins.

Write operations become more complex in denormalized systems. Updates might need changes in several places to maintain consistency.

The performance impact depends on the application’s read-to-write ratio. Transaction systems favor normalization, while reporting systems often use denormalization.

Ready to practice your skills? Try our database normalization exercises or test your knowledge with database quizzes. For advanced learners, explore premium database projects to apply normalization and denormalization concepts in real-world scenarios.

How does denormalization differ from normalization in terms of handling data redundancy?

Normalization eliminates data redundancy by storing each fact only once. Related tables connect through foreign keys instead of repeating information.

This approach saves storage space and prevents inconsistent data. When a customer changes their address, the update happens in one table row.

Denormalization intentionally adds redundancy to improve query performance. The same data appears in multiple tables or columns for faster access.

Learn more about normalization and denormalization from the official Microsoft documentation.

The choice depends on whether consistency or speed takes priority. Normalized designs prioritize data integrity, while denormalized designs optimize for performance.