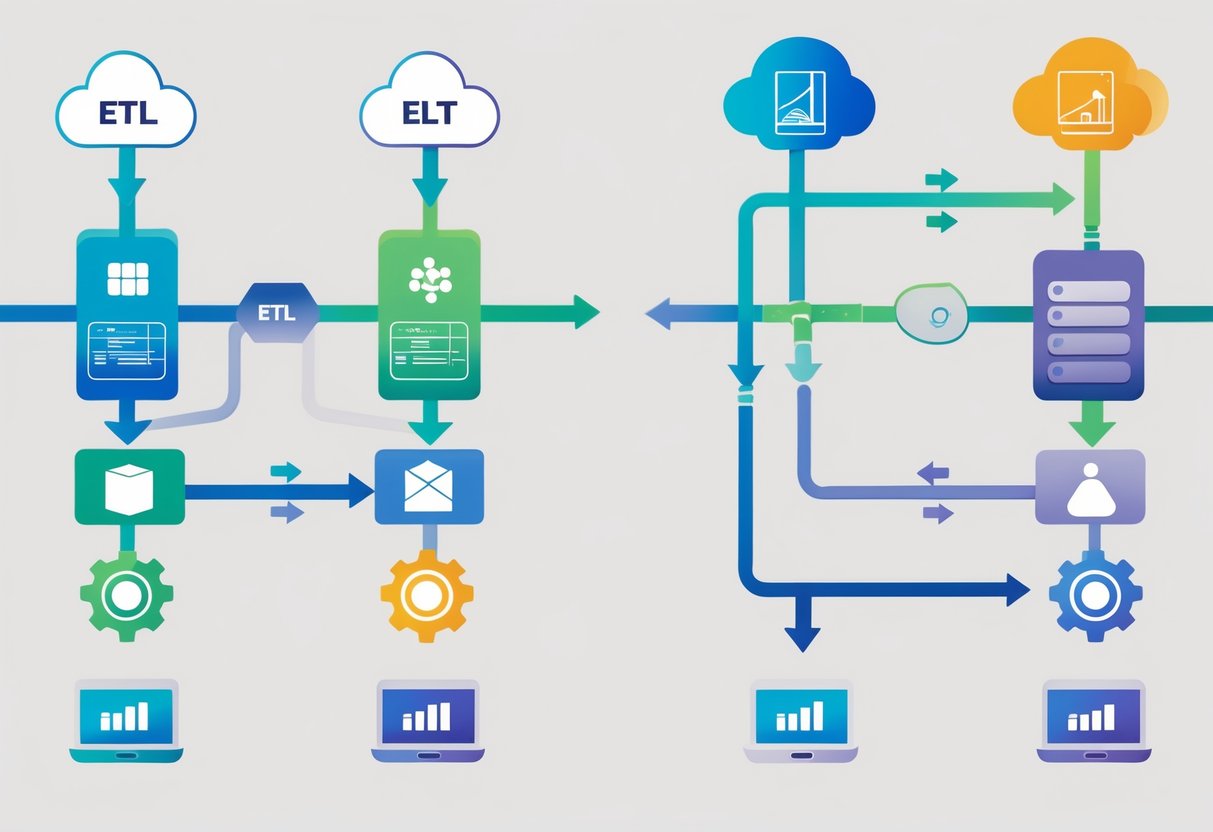

Choosing between the two most popular data integration methods can feel overwhelming. ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are both data integration processes that move raw data from source systems to target databases, but they handle the transformation step at different stages of the pipeline. The main difference lies in when the data gets cleaned and organized during the process.

Understanding ETL vs ELT differences helps businesses make better decisions about their data workflows. ETL transforms data before loading it into the final destination, while ELT loads raw data first and transforms it afterward. This simple change in order creates significant differences in speed, data quality, and use cases.

Both approaches have specific advantages depending on data volume, processing power, and business needs. Organizations dealing with large amounts of real-time data often prefer ELT, while those requiring clean, structured data from the start typically choose ETL. The right choice depends on factors like data size, transformation complexity, and available computing resources.

Key Takeaways

- ETL transforms data before loading while ELT loads raw data first then transforms it later

- ELT works better for large data volumes and real-time processing while ETL delivers cleaner data upfront

- The best approach depends on your data size, transformation needs, and available computing power

Core Concepts: What Are ETL and ELT?

ETL and ELT are data integration processes that move raw data from source systems to target databases. The key difference lies in when data transformation happens during the data pipeline.

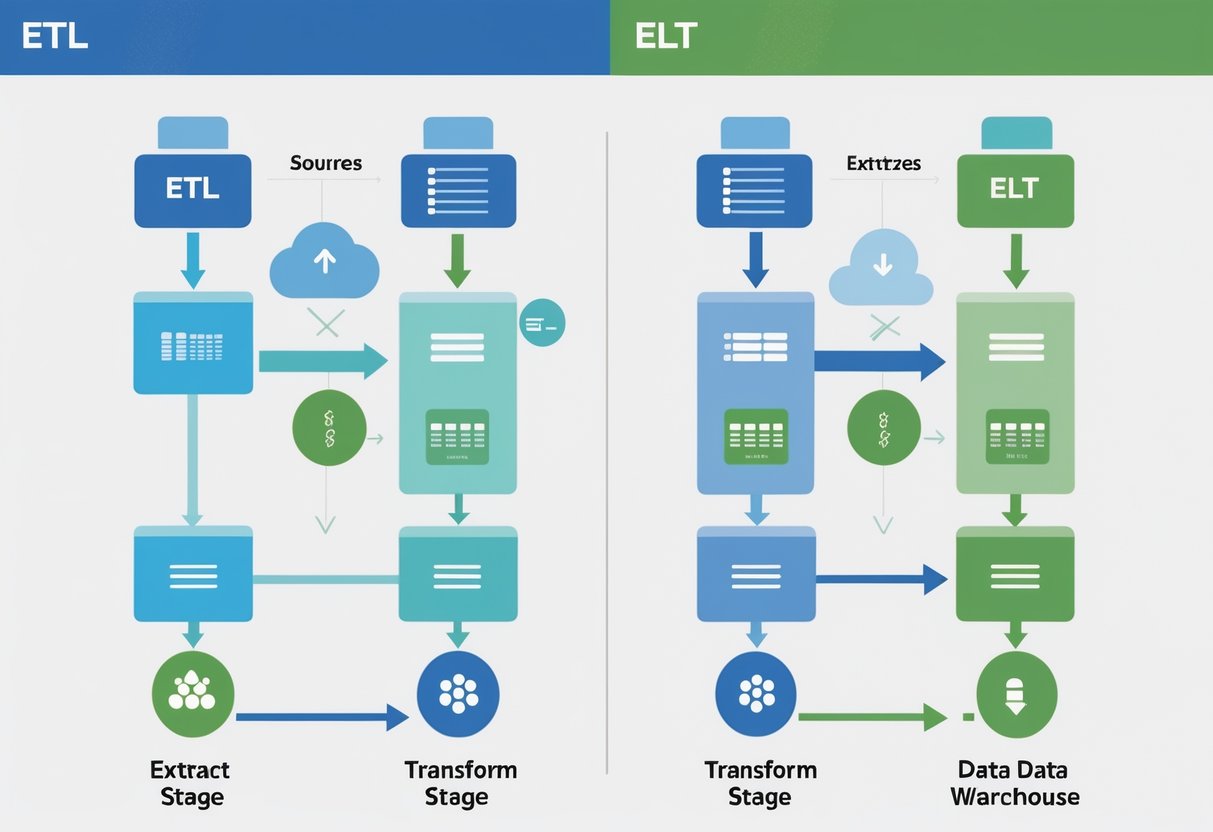

ETL Process Overview

ETL stands for Extract, Transform, Load. This process follows a specific order where data transformation occurs before loading into the target system.

Extract involves pulling data from various sources like databases, files, or APIs. The system collects raw data from multiple locations.

Transform happens next. The data gets cleaned, formatted, and changed to match business rules. This step removes errors and makes data consistent.

Load comes last. The transformed data moves into the final destination like a data warehouse.

ETL works well when organizations need clean, structured data before storage. The transformation step ensures data quality before it reaches the target system.
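The three ETL steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `SOURCE_ROWS` list, the `orders` table, and the in-memory SQLite database standing in for a data warehouse are all assumptions made for the example.

```python
import sqlite3

# Hypothetical source rows, standing in for data pulled from a database, file, or API.
SOURCE_ROWS = [
    {"id": "1", "name": " Alice ", "amount": "10.50"},
    {"id": "2", "name": "Bob", "amount": "n/a"},        # invalid amount
    {"id": "1", "name": " Alice ", "amount": "10.50"},  # duplicate
]


def extract():
    """Extract: collect raw rows from the source."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: trim names, cast types, drop invalid rows and duplicates."""
    seen, clean = set(), []
    for row in rows:
        try:
            record = (int(row["id"]), row["name"].strip(), float(row["amount"]))
        except ValueError:
            continue  # skip rows that fail type conversion
        if record[0] not in seen:
            seen.add(record[0])
            clean.append(record)
    return clean


def load(conn, records):
    """Load: write only the transformed records to the target table."""
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a data warehouse
load(conn, transform(extract()))
etl_result = conn.execute("SELECT id, name, amount FROM orders").fetchall()
```

Only the cleaned row reaches the target. The invalid and duplicate rows never touch the warehouse, which is the defining property of ETL.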

ELT Process Overview

ELT stands for Extract, Load, Transform. This approach loads raw data first, then transforms it within the target system.

Extract pulls data from source systems just like ETL. The collection process remains the same.

Load happens immediately after extraction. Raw data moves directly into the target database without changes.

Transform occurs last within the destination system. The target database handles all data processing and cleaning tasks.

ELT processes work best with modern cloud platforms that have strong computing power. The target system needs enough resources to handle transformation tasks.
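For contrast, here is a rough ELT sketch under similar assumptions: an in-memory SQLite database plays the role of the target system, and the `raw_orders` and `orders` table names are made up for illustration. The raw rows are loaded untouched, and the cleaning runs afterward as SQL inside the target.

```python
import sqlite3

# Hypothetical raw rows, loaded exactly as they arrive from the source.
RAW_ROWS = [
    ("1", " Alice ", "10.50"),
    ("2", "Bob", "n/a"),        # invalid amount, still loaded as-is
    ("1", " Alice ", "10.50"),  # duplicate, still loaded as-is
]

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a cloud warehouse

# Load: every column is stored as text, with no cleaning applied.
conn.execute("CREATE TABLE raw_orders (id TEXT, name TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ROWS)

# Transform: the target system's SQL engine does the cleaning.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT
        CAST(id AS INTEGER)  AS id,
        TRIM(name)           AS name,
        CAST(amount AS REAL) AS amount
    FROM raw_orders
    WHERE amount GLOB '[0-9]*'   -- crude validity check: amount starts with a digit
    """
)

raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
elt_result = conn.execute("SELECT id, name, amount FROM orders").fetchall()
```

The raw table still holds all three original rows after the transformation, so a different cleaning rule can be applied later without going back to the source.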

The Role of Data Transformation

Data transformation changes raw data into a usable format. This process cleans, combines, and structures information for analysis.

Common transformation tasks include removing duplicates, fixing data types, and combining fields. Organizations also apply business rules during this step.

Timing matters for transformation. ETL transforms data before storage, while ELT transforms after loading.

The transformation location affects performance and flexibility. ETL uses separate processing power, while ELT relies on the target system’s resources.

Both approaches achieve the same goal of preparing data for business use. The choice depends on system capabilities and data requirements.

Step-by-Step Comparison: How ETL and ELT Work

The main difference between ETL and ELT approaches lies in when and where data transformation happens. ETL transforms data before loading it into the destination, while ELT loads raw data first and transforms it afterward.

Data Extraction Differences

Both ETL and ELT start with the same extraction process. They pull raw data from various data sources like databases, files, and applications.

ETL extraction focuses on collecting specific data fields that will be needed after transformation. The process often extracts smaller amounts of data because it only takes what will be used.

ELT extraction pulls larger volumes of raw data from data sources. It captures everything available because transformation decisions happen later in the process.

The extraction methods are similar for both approaches. They connect to databases, read files, and access APIs to gather information.

Data extraction timing can differ between the two methods. ETL often extracts data in scheduled batches. ELT can handle both batch and real-time extraction more easily.

Both processes must handle data from multiple sources. These include relational databases, cloud applications, and flat files.

Loading Approaches and Destinations

The loading phase shows the biggest difference between ETL and ELT data integration methods.

ETL loading puts cleaned and transformed data directly into the final destination. This destination is usually a data warehouse with structured tables and schemas.

ETL often uses a staging area during the process. Raw data sits temporarily in this staging area while transformation happens. The staging area acts as a workspace before final loading.
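A staging area can be as simple as a temporary directory. The sketch below assumes a hypothetical `customers_raw.csv` file and a small set of made-up rows. It lands the raw extract in staging, transforms from there, and lets the staging directory disappear once the transformed rows are ready to load.

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical raw extract: a header row followed by two customer rows.
raw_rows = [["id", "email"], ["1", "A@Example.com"], ["2", "b@example.com "]]

with tempfile.TemporaryDirectory() as staging_dir:
    staging_file = Path(staging_dir) / "customers_raw.csv"

    # Extract: land the raw rows in the staging area untouched.
    with staging_file.open("w", newline="") as f:
        csv.writer(f).writerows(raw_rows)

    # Transform: read from staging and normalize before anything reaches the target.
    with staging_file.open(newline="") as f:
        staged = [
            {"id": int(r["id"]), "email": r["email"].strip().lower()}
            for r in csv.DictReader(f)
        ]

# The staging directory has been removed; only the transformed rows move on to loading.
staging_exists_after = staging_file.exists()
```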

ELT loading moves raw data directly into the destination system first. This destination is typically a data lake or modern data warehouse that can store unstructured data.

Data lakes work well for ELT because they accept any type of raw data. Traditional data warehouses need structured data, which makes them better for ETL.

ELT loading happens faster because there is no transformation step slowing it down. The raw data moves quickly from data sources to the destination.

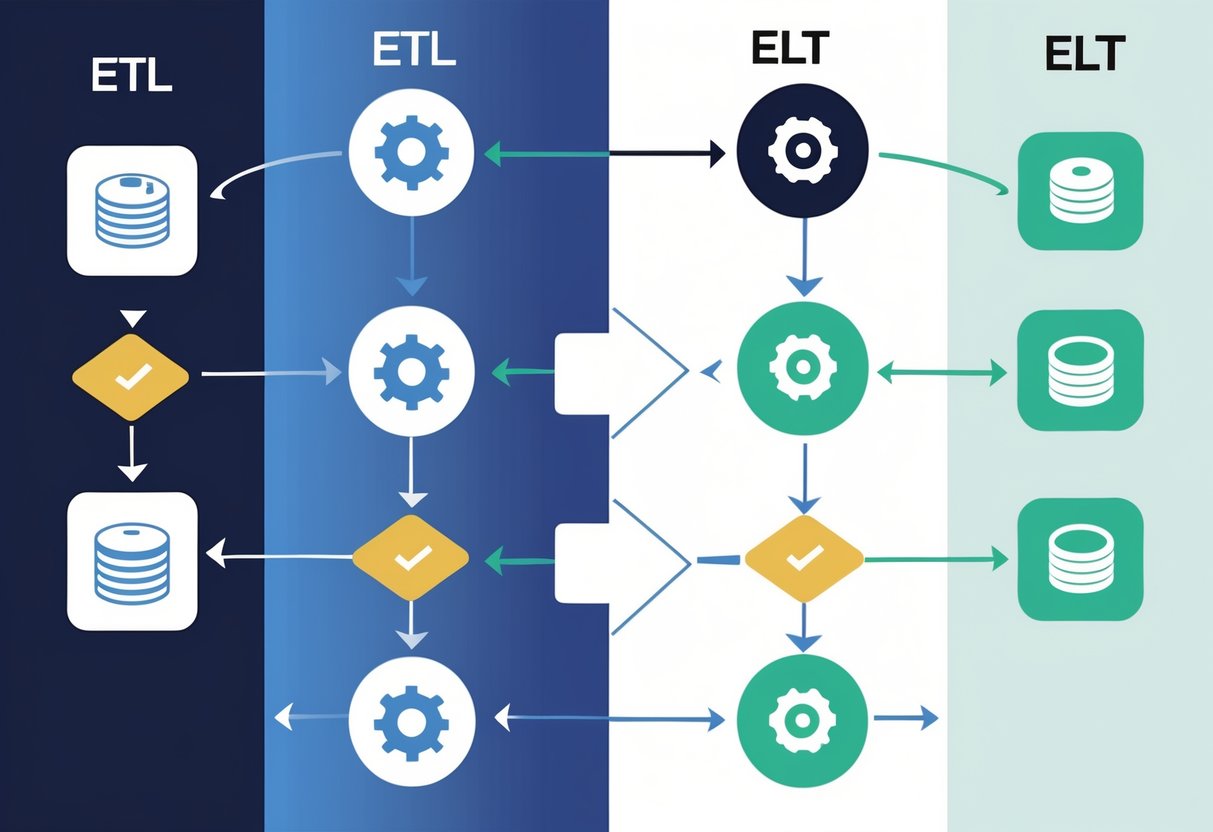

Transformation Timing and Methods

Transformation timing creates the core difference between these data processing approaches.

ETL transformation happens before loading data into the destination. External servers or staging areas handle the transformation work. This approach cleans and structures data before it reaches the data warehouse.

The transformation process in ETL includes data cleaning, formatting, and business rule application. All of this work completes before data loading begins.

ELT transformation occurs after raw data loads into the destination system. The data warehouse or data lake handles transformation using its own computing power.

ELT transformations can happen on-demand when users need specific data views. This flexibility allows for different transformation approaches for different use cases.
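One way to picture on-demand transformation is with SQL views over a raw table. In this sketch, SQLite stands in for the destination system, and the `raw_sales` table and both view names are invented for the example. Each view is computed only when someone queries it, and both read the same raw data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or data lake engine
conn.execute("CREATE TABLE raw_sales (region TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?, ?)",
    [("north", "widget", 100.0), ("north", "gadget", 50.0), ("south", "widget", 70.0)],
)

# Two views over the same raw table; each is evaluated only when queried.
conn.execute(
    "CREATE VIEW sales_by_region AS "
    "SELECT region, SUM(amount) AS total FROM raw_sales GROUP BY region"
)
conn.execute(
    "CREATE VIEW sales_by_product AS "
    "SELECT product, SUM(amount) AS total FROM raw_sales GROUP BY product"
)

by_region = conn.execute("SELECT * FROM sales_by_region ORDER BY region").fetchall()
by_product = conn.execute("SELECT * FROM sales_by_product ORDER BY product").fetchall()
```

A finance team and a product team can each use the view that suits them, and neither requires a new extraction from the source systems.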

Modern data warehouses have strong processing capabilities that make ELT transformation efficient. They can transform large amounts of raw data quickly using distributed computing.

The timing difference affects how quickly data becomes available. ETL provides ready-to-use data but takes longer overall. ELT makes raw data available immediately but requires transformation time for analysis.

Key Differences Between ETL and ELT

ETL and ELT differ mainly in when data gets transformed, which affects how they handle different data types and perform at scale. The transformation timing also shapes what tools work best and how much computing power each approach needs.

Data Compatibility and Structure

ETL works best with structured data from traditional databases and systems. The transformation happens before loading, so data must fit into predefined formats and schemas.

This approach struggles with unstructured data like social media posts, images, or sensor readings. The data needs to be cleaned and organized before it can enter the target system.

ELT handles both structured and unstructured data more easily. Raw data gets loaded first into systems like cloud data warehouses, then transformed as needed.

Modern platforms like Snowflake, Redshift, and BigQuery support this flexible approach. They can store messy data and transform it later using SQL queries.

| Data Type | ETL Compatibility | ELT Compatibility |
|---|---|---|
| Structured | High | High |
| Semi-structured | Medium | High |
| Unstructured | Low | High |

Performance and Scalability

ETL performance depends on the processing power of the transformation server. As data volumes grow, this server can become a bottleneck that slows down the entire pipeline.

Because transformation happens before loading, no data reaches the target until it has passed through the transformation layer. This creates delays when working with big data sets.

ELT leverages the power of modern cloud data warehouses for transformation. These systems can scale up automatically to handle larger workloads without manual intervention.

Tools like Fivetran extract and load data quickly, while dbt handles transformations using the warehouse’s computing power. This separation allows each step to scale independently.

Cloud resources can make ELT more cost-effective for large datasets. Organizations pay only for the computing power they use during transformation, rather than maintaining dedicated servers.

Architecture and Tooling

ETL requires a separate transformation layer between source and target systems. This creates a more complex architecture with multiple moving parts that need maintenance.

Traditional ETL tools often require specialized skills and custom coding. Changes to data sources or business rules mean updating transformation logic in multiple places.

ELT uses simpler architecture with fewer components. Data flows directly from sources to the target warehouse, where transformations happen using familiar SQL commands.

Modern ELT stacks combine tools like Fivetran for data extraction and loading with dbt for transformation. This approach uses version control and testing practices from software development.

Cloud data warehouses like BigQuery and Redshift provide built-in transformation capabilities. Data teams can use standard SQL instead of learning proprietary ETL tools and interfaces.

Benefits and Limitations of Each Approach

ETL pipelines excel at delivering clean, validated data that meets strict regulatory requirements, while ELT workflows offer superior flexibility and speed for modern analytics needs. Each approach faces distinct challenges around cost, complexity, and data management requirements.

Strengths of ETL Pipelines

ETL delivers exceptional data quality by cleaning and validating information before it reaches the target system. This process removes duplicates, fixes errors, and ensures consistency across all datasets.

Organizations in regulated industries benefit because ETL applies controls before data is stored. The approach makes it easier to comply with data privacy regulations like GDPR and HIPAA by applying data masking and filtering before loading.

Structured data processing represents another key strength. ETL handles traditional database formats efficiently and works well with existing enterprise systems.

The method provides predictable performance for batch processing scenarios. Companies can schedule regular data updates without impacting operational systems during business hours.

ETL creates detailed audit trails that support compliance reporting. This feature helps organizations track data lineage and demonstrate regulatory adherence.

Advantages of ELT Workflows

ELT enables near real-time analytics by loading data immediately into target systems. This speed advantage supports faster business decision-making and responsive data strategies.

The approach handles massive data volumes more effectively than traditional methods. Cloud-native platforms can automatically scale processing power to match data volume requirements.

Flexibility stands out as ELT’s primary benefit. Data teams can apply several different sets of transformation logic to the same raw data without re-extracting information from source systems.

ELT supports diverse data types including structured, semi-structured, and unstructured formats. This capability enables comprehensive analytics across different data sources.

Lower infrastructure costs result from consolidating storage and processing in a single system. Organizations avoid maintaining separate staging environments and transformation servers.

The method accommodates iterative data modeling approaches that support modern analytics workflows and machine learning applications.

Potential Drawbacks and Challenges

ETL faces scalability limitations when handling large data volumes or complex transformation requirements. The staging process can become a bottleneck for high-velocity data streams.

Infrastructure overhead creates ongoing maintenance challenges. Organizations must manage separate systems for extraction, transformation, and loading processes.

ELT introduces data privacy risks by loading raw information before applying security controls. Sensitive data remains exposed until transformation rules implement proper masking or filtering.

Cloud computing costs can escalate quickly with ELT approaches. Heavy transformation workloads consume significant processing resources and drive up operational expenses.

Skills requirements differ significantly between approaches. ETL demands expertise in traditional integration tools, while ELT requires advanced SQL knowledge and cloud platform experience.

Data quality control becomes more complex with ELT workflows. Teams must implement validation rules within target systems rather than during preprocessing stages.
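Validation inside the target system often takes the form of queries that count offending rows. The sketch below is one possible shape for such checks, using SQLite as a stand-in for the target. The `raw_customers` table and the two check names are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the target system
conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (2, "b@example.com")],
)

# Each check is a query that returns the number of offending rows or keys.
CHECKS = {
    "null_email": "SELECT COUNT(*) FROM raw_customers WHERE email IS NULL",
    "duplicate_id": (
        "SELECT COUNT(*) FROM ("
        "SELECT id FROM raw_customers GROUP BY id HAVING COUNT(*) > 1)"
    ),
}

# Run every check and keep only the ones that found problems.
counts = {name: conn.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}
failures = {name: count for name, count in counts.items() if count > 0}
```

A pipeline could refuse to publish transformed tables while `failures` is non-empty, which moves the quality gate from preprocessing into the warehouse itself.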

Choosing the Right Approach: Best Practices and Use Cases

The choice between ETL and ELT depends on your existing infrastructure, data volume, and business requirements. Modern cloud environments favor ELT for scalability, while legacy systems often work better with traditional ETL approaches.

Legacy Systems vs Cloud-Native Environments

Legacy systems with limited processing power work best with ETL approaches. These older environments include traditional ERP and CRM systems that cannot handle large-scale data transformations.

ETL reduces the load on these systems by processing data externally. The transformed data arrives ready to use without taxing legacy hardware.

Cloud-native environments excel with ELT implementations. Modern cloud platforms offer elastic storage and processing power that scale with demand, which suits high-volume, rapidly arriving data.

Companies can load raw data quickly into cloud storage. They transform it later using powerful cloud computing resources when needed.

Key considerations for legacy systems:

- Limited processing capacity

- Structured data requirements

- Existing data architecture constraints

Key considerations for cloud platforms:

- Scalable storage options

- Flexible processing power

- Cost-effective for large datasets

Supporting Analytics and Machine Learning

Machine learning projects require different data processing approaches than traditional reporting. ELT works better for machine learning because it preserves raw data for multiple uses.

Data scientists need access to unprocessed information. They experiment with different transformations and feature engineering approaches during model development.

Real-time analytics benefit from ELT when processing speeds matter most. Loading data first enables faster initial access while transformations run in parallel.

ETL suits traditional business intelligence better. Standard reports need consistent, pre-processed data that ETL delivers efficiently.

Machine learning requirements:

- Raw data preservation

- Flexible transformation options

- Iterative data exploration

Traditional analytics needs:

- Consistent data formats

- Pre-defined business rules

- Reliable reporting schedules

Industry-Specific Applications

Financial services often require ETL for regulatory compliance. Banks need data validation and cleansing before loading sensitive information into secure systems.

Healthcare organizations use ETL to ensure patient data meets strict privacy standards. Medical records require transformation to remove identifying information before analysis.

Retail companies favor ELT for customer behavior analysis. They process large volumes of transaction data and need flexibility to analyze purchasing patterns quickly.

Manufacturing firms choose based on their data architecture. Those with modern IoT sensors use ELT to handle continuous data streams from production equipment.

Industries favoring ETL:

- Banking and finance

- Healthcare and pharmaceuticals

- Government agencies

Industries favoring ELT:

- E-commerce and retail

- Technology companies

- Media and entertainment

Security, Compliance, and Data Governance Considerations

ETL processes handle sensitive data transformations before loading, while ELT systems must rely on destination platform security features. Data governance and compliance rules can be incorporated during ETL transformation phases, but ELT approaches face different challenges for maintaining proper controls.

Built-In Security Features

ETL systems provide dedicated security layers during the transformation process. Organizations can encrypt data, mask sensitive information, and apply security rules before data reaches its final destination.

The transformation stage allows teams to remove or hide personal data. Companies can strip credit card numbers, social security numbers, and other private details during processing.
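As a rough illustration of masking during the transformation stage, the sketch below drops a social security number entirely and keeps only the last four digits of a card number. The field names (`card_number`, `ssn`, `customer_id`) and the masking rules are assumptions for the example. Real rules come from an organization's own security policy.

```python
def mask_card(card_number: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


def mask_record(record: dict) -> dict:
    """Return a copy that is safe to load: SSN dropped, card number masked."""
    safe = {k: v for k, v in record.items() if k != "ssn"}
    safe["card_number"] = mask_card(record["card_number"])
    return safe


# Hypothetical raw record using a well-known test card number.
raw = {"customer_id": 7, "card_number": "4111 1111 1111 1111", "ssn": "123-45-6789"}
masked = mask_record(raw)
```

Because `mask_record` runs before loading, the destination never stores the full card number or the SSN.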

ELT approaches depend entirely on the security features of the destination system. Data warehouses like Snowflake and BigQuery offer strong security, but raw data lands in them before any masking or filtering has been applied.

Raw data sits in the destination system before transformation occurs. This creates potential security gaps if the warehouse lacks proper protection or if unauthorized users gain access to untransformed data.

Access Control and Authentication

ETL provides precise control over data handling, facilitating data masking, access control, and compliance requirements during transformation. Teams can set up user permissions and authentication at multiple points in the pipeline.

Multifactor authentication works well with ETL systems. Organizations can require additional verification steps before users access transformation tools or processed data.

ETL platforms allow granular permission settings. Different team members can access specific datasets, transformation rules, or output destinations based on their roles.

ELT systems must rely on whatever access controls and governance features the destination platform provides, which may not offer the same level of granular control.

Cloud data warehouses typically offer role-based access controls. However, these controls apply after raw data has already been loaded into the system.

Compliance with Regulations

Data privacy regulations like GDPR and CCPA require specific handling of personal information. ETL systems excel at meeting these requirements because they can modify data before it reaches storage systems.

Organizations can implement data retention policies during ETL processing. They can automatically delete expired records or anonymize personal details based on regulatory timelines.
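A retention rule applied during ETL might look like the sketch below. The two time limits are hypothetical placeholders, not values taken from any regulation, and the record fields are made up for the example.

```python
from datetime import date, timedelta

# Hypothetical timelines; real values come from the applicable regulation or policy.
ANONYMIZE_AFTER = timedelta(days=365)
DELETE_AFTER = timedelta(days=730)


def apply_retention(records, today):
    """Drop expired records and anonymize older ones before loading."""
    kept = []
    for rec in records:
        age = today - rec["created"]
        if age > DELETE_AFTER:
            continue  # expired: never reaches the target system
        if age > ANONYMIZE_AFTER:
            rec = {**rec, "name": None}  # anonymize the personal detail
        kept.append(rec)
    return kept


today = date(2024, 6, 1)
records = [
    {"id": 1, "name": "Alice", "created": date(2024, 1, 15)},  # recent: kept as-is
    {"id": 2, "name": "Bob", "created": date(2023, 1, 15)},    # older: anonymized
    {"id": 3, "name": "Cara", "created": date(2021, 1, 15)},   # expired: dropped
]
retained = apply_retention(records, today)
```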

HIPAA compliance becomes easier with ETL approaches. Healthcare organizations can encrypt patient data, remove identifying information, and apply access restrictions during transformation.

ELT systems face compliance challenges because raw data loads first. Companies must ensure their data warehouses meet all regulatory requirements from the moment data arrives.

Audit trails work differently in each approach. ETL systems can log every transformation step, while ELT systems depend on the destination platform’s logging capabilities for compliance reporting.
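To make the idea of logging every transformation step concrete, here is a small sketch that wraps each step in a decorator and records row counts. The `audited` decorator, the in-memory `audit_log` list, and the two step functions are all hypothetical. A real pipeline would write to durable storage instead.

```python
import functools

audit_log = []  # illustrative only; a real pipeline would use durable storage


def audited(step):
    """Record the step name and row counts every time a transformation runs."""
    @functools.wraps(step)
    def wrapper(rows):
        result = step(rows)
        audit_log.append(
            {"step": step.__name__, "rows_in": len(rows), "rows_out": len(result)}
        )
        return result
    return wrapper


@audited
def drop_missing_ids(rows):
    """Remove rows that have no id."""
    return [r for r in rows if r.get("id") is not None]


@audited
def deduplicate(rows):
    """Keep one row per id."""
    return list({r["id"]: r for r in rows}.values())


rows = [{"id": 1}, {"id": None}, {"id": 1}, {"id": 2}]
clean = deduplicate(drop_missing_ids(rows))
```

After the run, `audit_log` holds one entry per step, showing how many rows went in and how many came out. That is the kind of lineage record compliance reporting tends to ask for.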

Frequently Asked Questions

ETL transforms data before loading it into storage, while ELT loads raw data first and transforms it later. The choice between these approaches depends on factors like data volume, processing power, and real-time requirements.

What are the key differences between ETL and ELT processes?

The main difference lies in when transformation happens. ETL extracts data from sources, transforms it into the desired format, then loads it into the target system.

ELT reverses this order. It extracts data from sources, loads it directly into the target system, then transforms it there.

ETL requires a separate processing environment for transformations. This means data gets cleaned and formatted before reaching its final destination.

ELT uses the target system’s computing power for transformations. Raw data sits in the warehouse or data lake until transformation occurs.

Processing time differs significantly between methods. ETL takes longer to land data in the target because transformation comes first, but the data is ready to use on arrival.

ELT loads data faster initially but requires transformation time when users need formatted data.

Which is more efficient for modern data management: ETL or ELT?

ELT typically works better for modern data management needs. Cloud computing platforms provide powerful processing capabilities that make ELT more practical.

Large data volumes favor ELT approaches. Modern data warehouses can handle massive amounts of raw data and transform it quickly when needed.

ETL remains efficient for smaller datasets with consistent formats. Organizations with limited storage or strict data quality requirements often prefer ETL.

ELT offers better scalability for growing businesses. Adding new data sources requires less upfront processing work.

Cost considerations vary by organization. ELT reduces initial processing costs but may increase storage expenses.

How do ETL and ELT differ in handling real-time data integration?

ETL struggles with real-time data processing. The transformation step creates delays that prevent immediate data availability.

Traditional ETL systems process data in batches. This means users wait for scheduled processing windows to access updated information.

ELT handles real-time data more effectively. Raw data loads immediately into the target system without waiting for transformation.

Streaming data works better with ELT approaches. Users can access recent data quickly and apply transformations as needed.

Real-time analytics benefit from ELT methods because data becomes available faster. Businesses can make decisions based on current information.

ETL requires special tools for real-time processing. These solutions add complexity and cost to data pipelines.

Can ETL and ELT be used in conjunction, and if so, in what scenarios?

Organizations often use both methods together. Different data types and business needs require different approaches.

Critical business data might use ETL for quality control. Less critical data can follow ELT patterns for speed and flexibility.

Hybrid approaches work well for complex organizations. Some departments need clean, structured data while others prefer raw data access.

Legacy systems may require ETL integration. Newer cloud systems can handle ELT processes for modern data sources.

Combined ETL and ELT strategies help organizations transition gradually. Teams can migrate systems without disrupting current operations.

Data governance requirements influence the choice. Regulated industries might use ETL for compliance data and ELT for analytics.
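A hybrid setup needs some rule for deciding which path each dataset takes. The sketch below shows one very simple rule based on two flags. The dataset names and the `regulated` and `legacy_target` flags are illustrative assumptions, and a real decision would weigh more factors.

```python
# Hypothetical dataset descriptions; the flags are illustrative, not a standard.
DATASETS = [
    {"name": "patient_records", "regulated": True, "legacy_target": False},
    {"name": "clickstream", "regulated": False, "legacy_target": False},
    {"name": "erp_invoices", "regulated": False, "legacy_target": True},
]


def choose_path(dataset):
    """Pick 'etl' for regulated data or legacy targets, otherwise 'elt'."""
    if dataset["regulated"] or dataset["legacy_target"]:
        return "etl"
    return "elt"


routing = {d["name"]: choose_path(d) for d in DATASETS}
```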

What are the advantages and disadvantages of ETL versus ELT in data warehousing?

ETL provides clean, validated data upon arrival. Data quality controls happen before storage, ensuring consistency across the warehouse.

ETL disadvantages include slower processing times and higher upfront costs. Complex transformations require significant computing resources before loading.

ELT offers faster data loading and lower initial processing costs. Organizations can store more data types without immediate transformation decisions.

ELT disadvantages include potential data quality issues. Raw data requires validation during transformation, which may reveal problems later.

ETL works better for structured data warehouses with defined schemas. ELT suits data lakes and flexible storage systems.

Storage costs differ between approaches. ETL stores only processed data while ELT stores raw and transformed versions.

How do the tools and technologies differ for ETL and ELT implementations?

ETL tools focus on data transformation capabilities. Popular options include Informatica, Talend, and SSIS for complex data processing.

These tools provide visual interfaces for mapping data transformations. Users can design workflows without extensive coding knowledge.

ELT tools emphasize data loading and storage management. Cloud platforms like Snowflake, BigQuery, and Redshift excel at ELT processing.

ELT implementations rely heavily on SQL for transformations. Data engineers write queries to process data within the target system.

Programming languages differ between approaches. ETL often uses Java or Python for custom transformations.

ELT leverages database languages and cloud-native tools. Modern data platforms provide built-in transformation capabilities.

Infrastructure requirements vary significantly. ETL needs separate processing servers while ELT uses target system resources.