Modern data analytics requires real-time insights, but traditional batch processing creates delays that can cost businesses valuable opportunities. Change Data Capture (CDC) enables organizations to track and capture data modifications as they happen, creating efficient analytics pipelines that deliver fresh insights without overwhelming system resources. This approach transforms how companies handle data movement by focusing only on what has changed rather than processing entire datasets repeatedly.

CDC patterns solve critical challenges in analytics by capturing inserts, updates, and deletes from source systems and streaming them to analytics platforms. Organizations can maintain data consistency across multiple systems while reducing processing overhead and storage costs. The technology works behind the scenes to identify changes and convert them into events that power real-time dashboards, machine learning models, and business intelligence tools.

Understanding CDC patterns becomes essential as data volumes grow and business demands shift toward real-time decision making. Different CDC approaches work better for specific use cases, and choosing the right architecture determines whether analytics pipelines can scale effectively. The implementation involves selecting appropriate tools, designing robust data flows, and managing the complexities that come with real-time data processing.

Key Takeaways

- CDC captures only data changes rather than full datasets, reducing processing overhead and enabling real-time analytics

- Multiple CDC patterns exist with different architectures, tools, and integration approaches suited for various business needs

- Successful CDC implementation requires careful planning around data consistency, error handling, and system scalability challenges

Core Concepts of Change Data Capture for Analytics

Change data capture tracks database changes as they happen and feeds them into analytics systems. This approach enables real-time insights by capturing only modified data instead of processing entire datasets repeatedly.

What Is Change Data Capture and Why Use It?

Change data capture is a data integration method that identifies and tracks changes made to source databases. It captures inserts, updates, and deletes as they occur and delivers these changes to downstream systems.

Traditional batch processing moves entire datasets on scheduled intervals. This creates delays between when data changes and when analytics systems reflect those changes. CDC solves this problem by streaming only the modified records.

The main benefits include:

- Reduced system load – Only changed data moves through the pipeline

- Lower latency – Changes appear in analytics systems within seconds or minutes

- Better resource usage – Less network bandwidth and storage needed

- Real-time capabilities – Enables live dashboards and instant alerts

CDC works by monitoring database transaction logs, timestamps, or triggers. When the system detects a change, it captures the before and after values along with metadata like timestamps and table names.
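As a rough illustration of what such a captured change might carry, the sketch below models one change event in Python. The `ChangeEvent` class, its field names, and the `changed_columns` helper are hypothetical and are not taken from any particular CDC tool.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured row change (hypothetical shape, for illustration only)."""
    table: str
    op: str                            # "insert", "update", or "delete"
    before: Optional[dict[str, Any]]   # row image before the change (None for inserts)
    after: Optional[dict[str, Any]]    # row image after the change (None for deletes)
    ts_ms: int                         # commit time in epoch milliseconds


def changed_columns(event: ChangeEvent) -> set[str]:
    """Return the columns whose values differ between the two row images."""
    before = event.before or {}
    after = event.after or {}
    return {c for c in before.keys() | after.keys() if before.get(c) != after.get(c)}


update = ChangeEvent(
    table="customers",
    op="update",
    before={"id": 7, "email": "old@example.com", "tier": "basic"},
    after={"id": 7, "email": "new@example.com", "tier": "basic"},
    ts_ms=1_700_000_000_000,
)
print(changed_columns(update))  # {'email'}
```

Carrying both row images lets a consumer decide whether a change is relevant to it, for example by ignoring updates that touch only columns it does not use.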

CDC in Modern Analytics Pipelines

Modern analytics pipelines use CDC as the foundation for streaming data architectures. CDC acts as the event producer that feeds change events into message queues like Apache Kafka or cloud streaming services.

The typical flow starts with CDC capturing changes from operational databases. These changes get published to a streaming platform where multiple consumers can process them. Analytics tools, data warehouses, and machine learning systems all consume these change streams.

Key components include:

- Source databases with CDC capabilities

- Change capture tools (Debezium, Airbyte, Fivetran)

- Streaming platforms (Kafka, AWS Kinesis, Google Pub/Sub)

- Target analytics systems (Snowflake, BigQuery, data lakes)

This architecture enables event-driven analytics where downstream systems react to data changes automatically. For example, when a customer updates their profile, the change triggers updates across recommendation engines, personalization systems, and reporting dashboards.

The streaming approach also supports lambda architecture patterns where both real-time and batch processing work together for comprehensive analytics coverage.

Key Use Cases in Real-Time Analytics

Real-time fraud detection represents one of the most critical CDC applications. Financial institutions use CDC to stream transaction data to fraud detection algorithms as soon as it is committed. When suspicious activity occurs, the system can flag or block transactions within seconds.

E-commerce personalization relies heavily on CDC for customer behavior tracking. Product views, cart additions, and purchases get captured and fed into recommendation engines immediately. This enables dynamic product suggestions based on current shopping behavior.

Operational monitoring uses CDC to track system health and performance metrics. Database changes trigger alerts when error rates spike or performance degrades. IT teams receive notifications before customers experience problems.

Supply chain optimization leverages CDC for inventory management. When stock levels change, the updates flow to procurement systems, warehouse management, and customer-facing applications simultaneously.

Customer 360 analytics combines data from multiple touchpoints using CDC. Marketing, sales, and support interactions get synchronized in real-time to provide complete customer views for service representatives and automated systems.

Types and Patterns of Change Data Capture

Different CDC approaches offer unique trade-offs between performance, complexity, and data accuracy. Log-based methods provide the highest performance by reading database transaction logs, while trigger-based approaches offer precise control through database-level automation.

Trigger-Based CDC Approaches

Trigger-based CDC uses database triggers to capture data changes automatically when insert, update, or delete operations occur. Database triggers execute immediately when changes happen to monitored tables.

This approach creates audit tables or change logs that store the modified data along with metadata like timestamps and operation types. Triggers can capture both old and new values for updated records.

Advantages:

- Captures inserts, updates, and deletes without changing the columns of the monitored tables

- Works with any database that supports triggers

- Provides immediate change detection

- Easy to implement for specific tables

Disadvantages:

- Adds overhead to database transactions

- Can slow down write operations

- Requires careful trigger management

- May impact database performance under heavy loads

Trigger-based CDC works best for systems with moderate write volumes where immediate change capture is more important than maximum throughput.
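A minimal sketch of the trigger pattern, using Python's built-in `sqlite3` module so it runs without a database server. The `accounts` table, the `accounts_changes` audit table, and the trigger names are made up for illustration; production databases use their own trigger syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);

-- Audit table populated only by the triggers below.
CREATE TABLE accounts_changes (
    change_id   INTEGER PRIMARY KEY AUTOINCREMENT,
    op          TEXT NOT NULL,
    account_id  INTEGER NOT NULL,
    old_balance INTEGER,
    new_balance INTEGER,
    changed_at  TEXT DEFAULT CURRENT_TIMESTAMP
);

CREATE TRIGGER accounts_ins AFTER INSERT ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, old_balance, new_balance)
    VALUES ('I', NEW.id, NULL, NEW.balance);
END;

CREATE TRIGGER accounts_upd AFTER UPDATE ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, old_balance, new_balance)
    VALUES ('U', NEW.id, OLD.balance, NEW.balance);
END;

CREATE TRIGGER accounts_del AFTER DELETE ON accounts BEGIN
    INSERT INTO accounts_changes (op, account_id, old_balance, new_balance)
    VALUES ('D', OLD.id, OLD.balance, NULL);
END;
""")

# Ordinary writes; the triggers record each one in the audit table.
conn.execute("INSERT INTO accounts (id, balance) VALUES (1, 100)")
conn.execute("UPDATE accounts SET balance = 250 WHERE id = 1")
conn.execute("DELETE FROM accounts WHERE id = 1")
conn.commit()

changes = conn.execute(
    "SELECT op, account_id, old_balance, new_balance "
    "FROM accounts_changes ORDER BY change_id"
).fetchall()
print(changes)
```

Each write to `accounts` now performs a second write inside the same transaction, which is where the overhead listed above comes from.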

Log-Based CDC Approaches

Log-based CDC reads database transaction logs directly to identify changes, with minimal impact on source database performance. This method accesses logs like MySQL’s binlog or PostgreSQL’s WAL (write-ahead log).

Transaction logs contain all database modifications in chronological order. CDC tools parse these logs to extract change events and convert them into usable data streams.

Key characteristics:

- MySQL CDC uses binary logs (binlog) to track changes

- PostgreSQL uses write-ahead logs (WAL) for change tracking

- Minimal performance impact on the source database, since changes are read from logs the database already writes

- Captures changes after transaction commits

Log-based approaches handle high-volume databases efficiently since they don’t interfere with normal database operations. However, transaction log formats vary between database vendors.

This pattern requires specialized tools that understand specific database log formats. The CDC system must also handle log retention and storage requirements.
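Once a tool has parsed the log, downstream code usually sees a stream of change events rather than raw log bytes. The sketch below applies such events to an in-memory replica. The event shape is a simplified assumption loosely modeled on the Debezium envelope (`op`, `before`, `after`); real events carry additional schema and source metadata, and the `apply_event` helper is hypothetical.

```python
import json


def apply_event(replica: dict, raw: str) -> None:
    """Apply one simplified change event to an in-memory replica keyed by id."""
    event = json.loads(raw)
    op = event["op"]
    if op in ("c", "r", "u"):          # create, snapshot read, update
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":                    # delete
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


# Events in commit order, as they would be emitted from the transaction log.
log = [
    '{"op": "c", "before": null, "after": {"id": 1, "status": "new"}}',
    '{"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}}',
    '{"op": "c", "before": null, "after": {"id": 2, "status": "new"}}',
    '{"op": "d", "before": {"id": 2, "status": "new"}, "after": null}',
]

replica = {}
for raw in log:
    apply_event(replica, raw)

print(replica)
```

Because the log is ordered by commit, replaying the events in sequence reproduces the source table's final state, including the delete that a timestamp query would not see.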

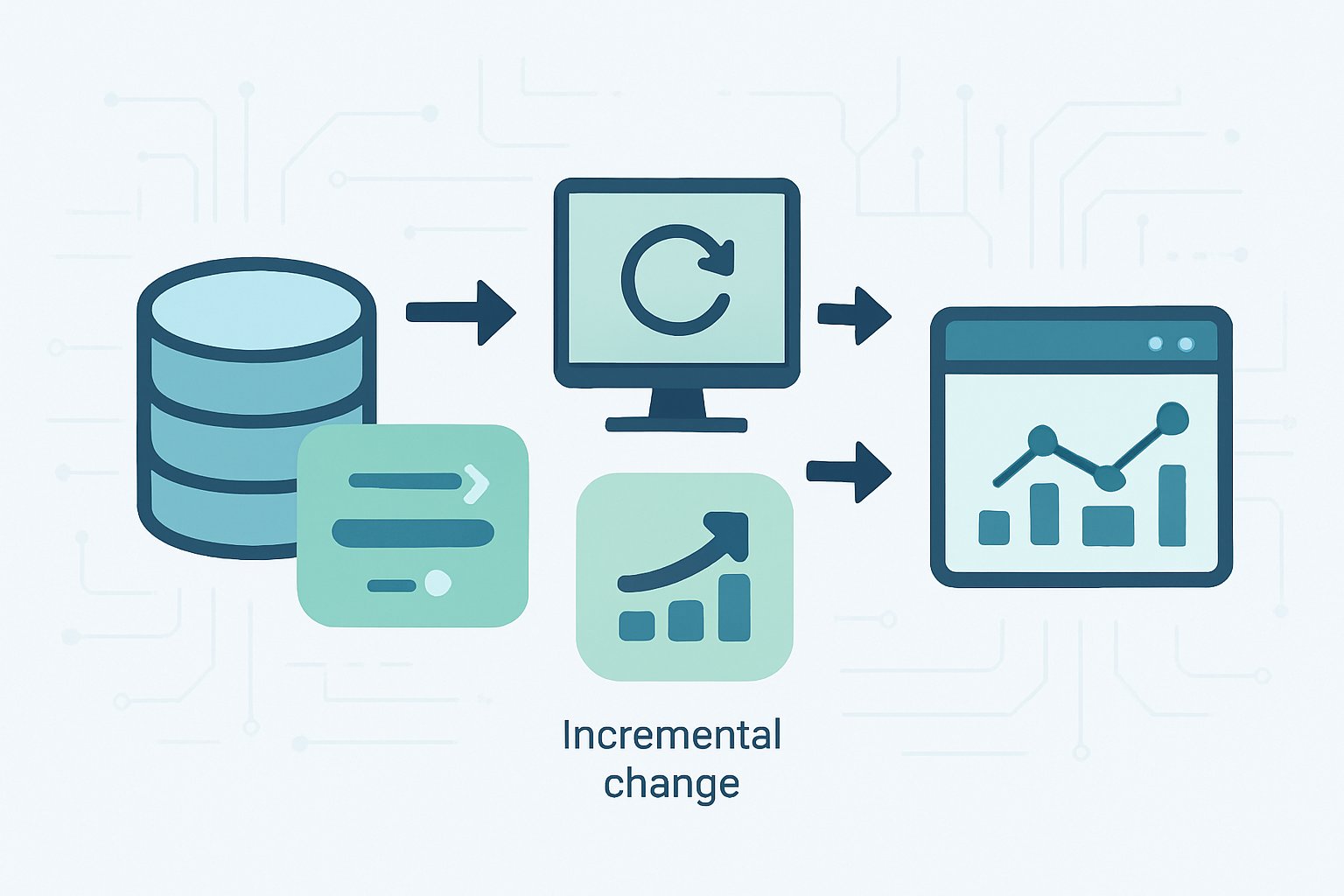

Timestamp and Query-Based CDC

Query-based CDC identifies changes by periodically scanning tables for modified records using timestamps or version columns. This approach compares current data snapshots with previous states to detect changes.

Timestamp-based methods rely on “last modified” columns to identify recently changed records. The CDC process queries for records with timestamps newer than the last processing time.

Implementation requirements:

- Tables must have timestamp or version columns

- Regular polling schedules determine change detection frequency

- Comparison logic identifies new, updated, or deleted records

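This pattern works well for systems that can tolerate some delay in change detection. It requires minimal database setup, but each poll sees only the latest state of a row, so intermediate updates between polling intervals are lost, and hard deletes go unnoticed unless snapshots are compared or rows are soft-deleted.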

Query-based CDC generates database load during each scan. Large tables require careful indexing on timestamp columns to maintain acceptable performance during change detection queries.
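The polling loop can be sketched with `sqlite3` as follows. The `orders` table, the integer `updated_at` column, and the `poll_changes` function are illustrative assumptions, not a reference implementation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)")
# Index the watermark column so each poll avoids a full table scan.
conn.execute("CREATE INDEX idx_orders_updated_at ON orders (updated_at)")


def poll_changes(conn, watermark):
    """Return rows modified after the watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 105)])
first, wm = poll_changes(conn, 0)

# Two updates and a hard delete happen between polls.
conn.execute("UPDATE orders SET status = 'paid', updated_at = 110 WHERE id = 1")
conn.execute("UPDATE orders SET status = 'shipped', updated_at = 120 WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 2")
second, wm = poll_changes(conn, wm)

print(first)   # both inserted rows
print(second)  # only the final state of order 1
```

The second poll returns only the `shipped` state of order 1: the intermediate `paid` state and the deletion of order 2 never appear. A strict `>` comparison can also skip rows that share the watermark's timestamp, so real implementations often use a small overlap window or a monotonically increasing version column.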

Architecture of CDC-Enabled Analytics Pipelines

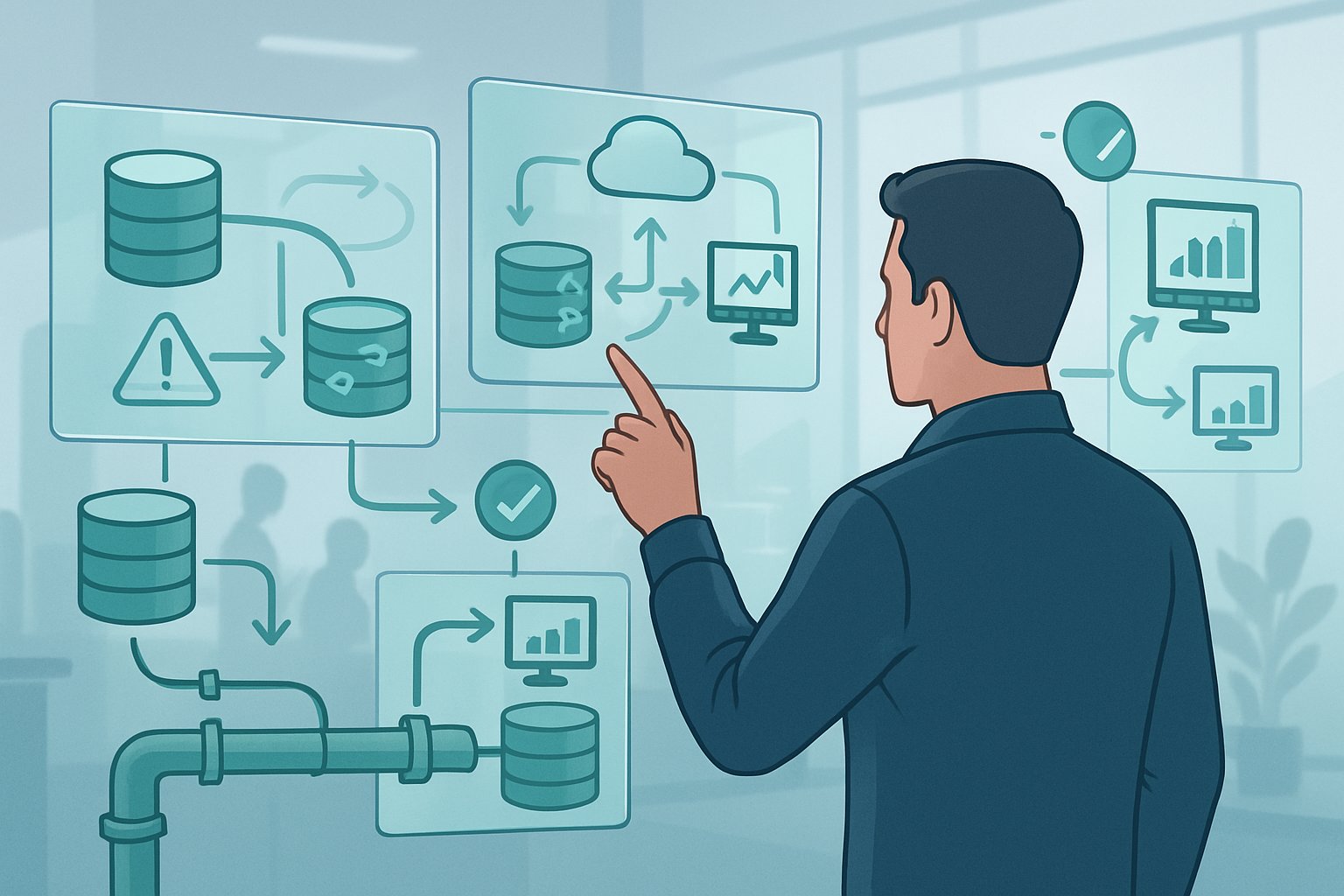

CDC architectures capture database changes in real-time and deliver them to analytics systems through structured data flows. Modern CDC pipelines support both data warehouses and data lakes with different architectural patterns optimized for each destination type.

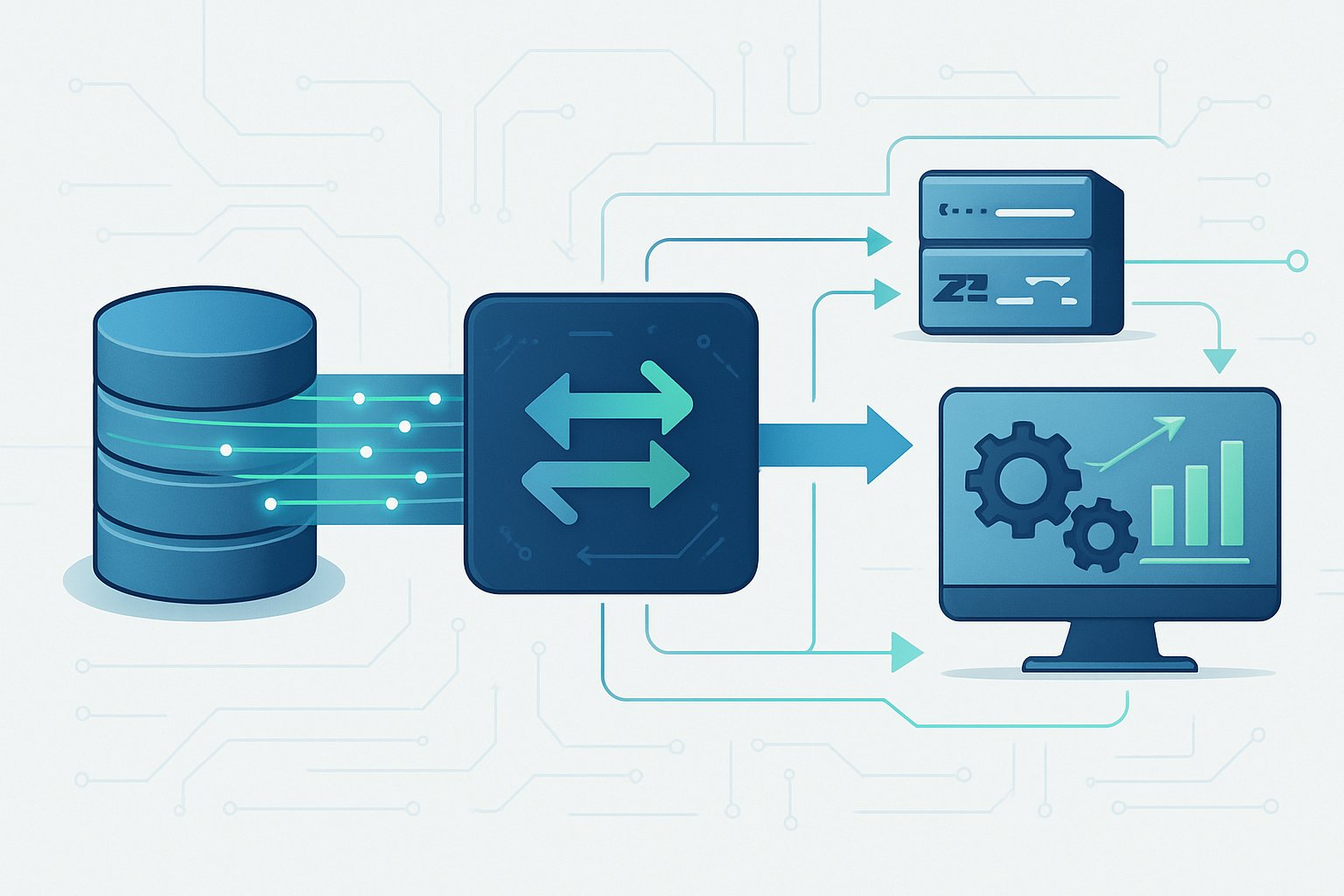

CDC Pipeline Components and Data Flow

A CDC pipeline consists of three main components that work together to move data from operational databases to analytics systems.

Change Capture Layer

The capture layer monitors transaction logs in operational databases. It reads write-ahead logs (WAL) or transaction logs to identify insert, update, and delete operations. This approach puts minimal load on source systems compared to query-based methods.

Processing and Transformation Layer

The middle layer processes captured changes before delivery. It handles schema evolution, data type conversions, and basic transformations. This layer also buffers data and manages delivery guarantees, typically at-least-once delivery paired with idempotent writes so that retries do not produce duplicates.

Delivery Layer

The final layer delivers processed changes to target systems. It supports both real-time streaming and micro-batch delivery modes. The delivery layer maintains metadata about processed changes and handles error recovery.

Data Flow Pattern

Changes flow from operational databases through the capture layer to the processing layer. The processing layer applies transformations and routes data to appropriate destinations. Multiple analytics systems can consume the same change stream without additional database load.

CDC Architecture for Data Warehouses

Data warehouse CDC architectures focus on maintaining consistent dimensional models and supporting analytical workloads with structured data patterns.

Staging Area Design

CDC pipelines typically use staging tables to land raw changes before processing. These staging areas buffer incoming changes and support batch loading into warehouse tables. The staging layer handles data validation and preliminary transformations.

Change Processing Patterns

Data warehouses use specific patterns to handle CDC data. Type 1 slowly changing dimensions overwrite existing records with new values. Type 2 dimensions create new records for each change while preserving history.
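The difference between the two dimension types can be sketched in plain Python. The `apply_type1` and `apply_type2` helpers and the row layout (`valid_from`, `valid_to`) are hypothetical; a warehouse would normally express the same logic in SQL.

```python
from datetime import date


def apply_type1(dim: dict, key, attrs: dict) -> None:
    """Type 1: overwrite the current record; no history is kept."""
    dim[key] = dict(attrs)


def apply_type2(history: list, key, attrs: dict, effective: date) -> None:
    """Type 2: close the current version and append a new one."""
    for row in history:
        if row["key"] == key and row["valid_to"] is None:
            if row["attrs"] == attrs:
                return                      # nothing changed, keep current version
            row["valid_to"] = effective     # close the previous version
            break
    history.append(
        {"key": key, "attrs": dict(attrs), "valid_from": effective, "valid_to": None}
    )


type1 = {}
type2 = []
# The same customer moves from Berlin to Madrid.
for day, city in [(date(2024, 1, 1), "Berlin"), (date(2024, 6, 1), "Madrid")]:
    apply_type1(type1, 42, {"city": city})
    apply_type2(type2, 42, {"city": city}, day)

print(type1)
for row in type2:
    print(row)
```

After both changes, the Type 1 dimension holds only Madrid, while the Type 2 history holds a closed Berlin row and an open Madrid row.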

Loading Strategies

Most data warehouses prefer micro-batch loading over continuous streaming. CDC systems accumulate changes for 1-15 minutes before loading. This approach balances data freshness with warehouse performance optimization.
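One way to picture micro-batching is a buffer that flushes when it reaches a row limit or an age limit, whichever comes first. The `MicroBatcher` class below is a hypothetical sketch; the `flush` callback stands in for a warehouse bulk load, and the clock is injectable so the example runs instantly.

```python
import time


class MicroBatcher:
    """Buffer rows and flush by size or age (illustrative sketch)."""

    def __init__(self, flush, max_rows=500, max_age_s=60.0, clock=time.monotonic):
        self._flush = flush
        self._max_rows = max_rows
        self._max_age_s = max_age_s
        self._clock = clock
        self._rows = []
        self._opened_at = None

    def add(self, row):
        if not self._rows:
            self._opened_at = self._clock()   # batch age starts with its first row
        self._rows.append(row)
        self.maybe_flush()

    def maybe_flush(self):
        """Flush if the batch is full or too old; call periodically from a timer."""
        if not self._rows:
            return
        too_big = len(self._rows) >= self._max_rows
        too_old = self._clock() - self._opened_at >= self._max_age_s
        if too_big or too_old:
            batch, self._rows = self._rows, []
            self._flush(batch)


now = [0.0]            # fake clock for the demonstration
loaded = []            # stands in for the warehouse
batcher = MicroBatcher(loaded.append, max_rows=3, max_age_s=60.0, clock=lambda: now[0])

for i in range(4):
    batcher.add(i)     # the third add triggers a size-based flush
now[0] = 61.0
batcher.maybe_flush()  # the remaining row is flushed by age

print(loaded)          # [[0, 1, 2], [3]]
```

Tuning `max_rows` and `max_age_s` is the trade-off described above: smaller values improve freshness, larger values give the warehouse fewer and bigger loads.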

Schema Management

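Many CDC tools support automated schema evolution. When source database schemas change, the pipeline can propagate compatible changes, such as added columns, to warehouse schemas automatically. This reduces pipeline failures and maintenance overhead, although breaking changes such as dropped or retyped columns usually still need review.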

CDC Architecture for Data Lakes

Data lake CDC architectures emphasize flexibility and support for both structured and semi-structured data storage patterns.

File-Based Storage

CDC systems write changes to data lakes as files, commonly Parquet, often managed by a table format such as Delta Lake. Each batch of changes creates new files with timestamps for organization. Table formats that retain earlier versions support time-travel queries and historical analysis.

Partitioning Strategies

Data lakes use partition schemes based on capture timestamps or business logic. Time-based partitions (hourly or daily) optimize query performance for recent data. Business partitions group related records together for analytical efficiency.

Merge Patterns

Data lakes implement upsert operations through merge patterns. Systems like Delta Lake and Apache Iceberg support ACID transactions for reliable updates. These patterns handle late-arriving data and out-of-order changes effectively.
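The core of a merge that tolerates late and out-of-order changes can be sketched in plain Python. This is not the Delta Lake or Iceberg API; the `merge_changes` function and the `seq` field (standing in for a log sequence number or commit version) are assumptions for illustration.

```python
def merge_changes(target: dict, changes: list) -> None:
    """Upsert changes into target, keeping only the highest sequence per key."""
    for change in changes:
        key, seq = change["key"], change["seq"]
        current = target.get(key)
        if current is not None and current["seq"] >= seq:
            continue  # stale or duplicate change; a newer version is already applied
        # Deletes are kept as tombstones so a late older update cannot resurrect the row.
        target[key] = {
            "seq": seq,
            "deleted": change["op"] == "delete",
            "row": change.get("row"),
        }


changes = [
    {"key": "a", "seq": 3, "op": "upsert", "row": {"qty": 30}},
    {"key": "a", "seq": 2, "op": "upsert", "row": {"qty": 20}},  # arrives late
    {"key": "b", "seq": 5, "op": "delete"},
    {"key": "b", "seq": 4, "op": "upsert", "row": {"qty": 1}},   # late, must not resurrect b
    {"key": "a", "seq": 3, "op": "upsert", "row": {"qty": 30}},  # duplicate delivery
]

target = {}
merge_changes(target, changes)
live = {k: v["row"] for k, v in target.items() if not v["deleted"]}
print(live)  # {'a': {'qty': 30}}
```

Comparing sequence numbers rather than arrival order makes the merge idempotent, so replaying a batch after a failure leaves the table unchanged.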

Multi-Format Support

CDC architectures for data lakes support multiple file formats simultaneously. Raw changes might be stored in JSON for flexibility while processed data uses Parquet for performance. This multi-format approach serves different analytical use cases.

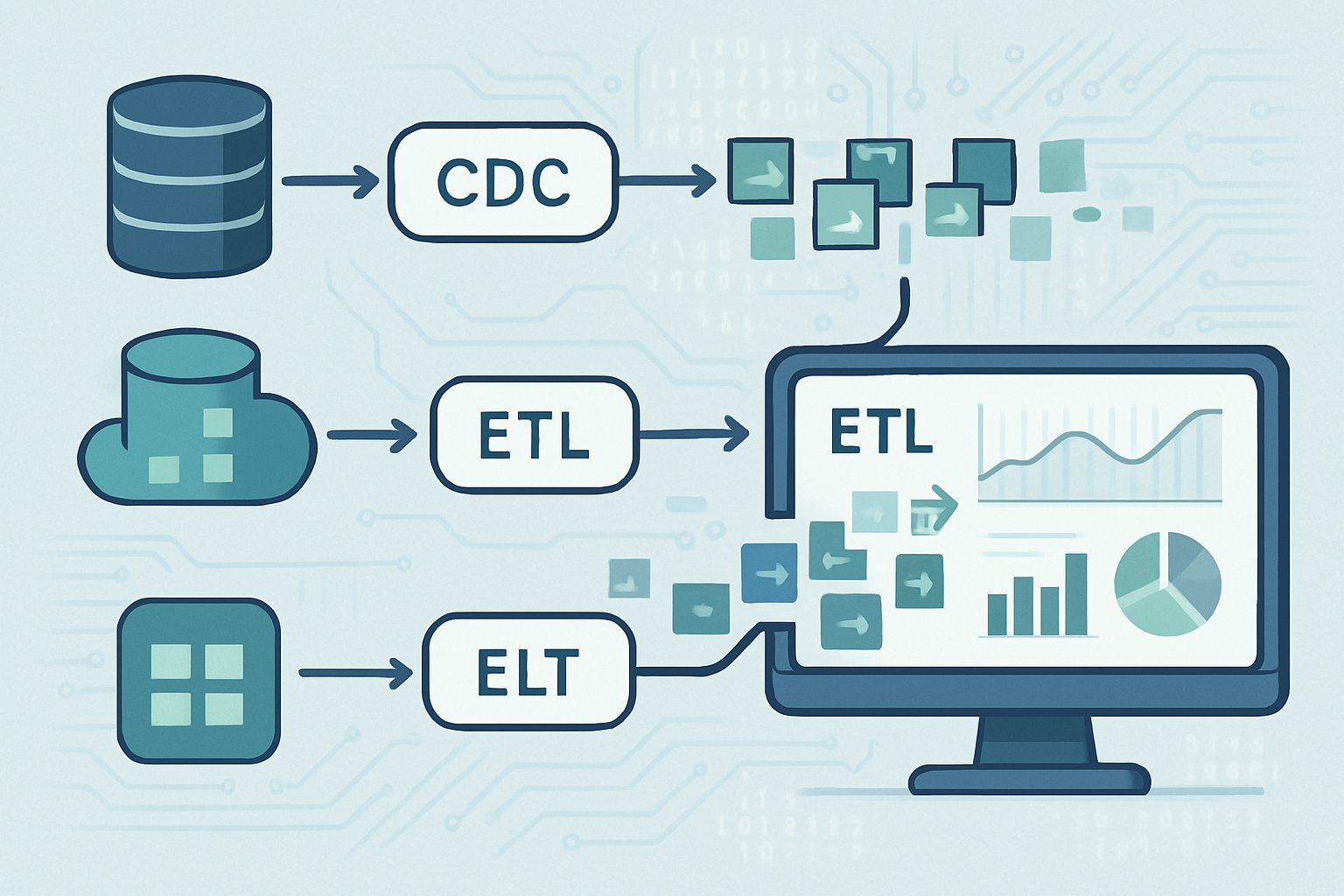

CDC in ETL and ELT Pipelines

CDC integrates differently with traditional batch-based ETL workflows compared to modern ELT architectures that leverage streaming technologies. The approach affects how data transformations occur and when changes reach target systems.

CDC with Traditional ETL Workflows

Traditional ETL workflows process CDC events in scheduled batches. The system extracts change events from source databases, transforms them in a staging area, then loads the processed data into target systems.

Batch processing typically runs on fixed schedules like hourly or daily intervals. This creates latency between when changes occur and when they appear in analytics systems.

The transformation step happens before loading data into the warehouse. Data engineers apply business rules, clean data, and join tables during this phase.

Key characteristics include:

- Scheduled execution at regular intervals

- Resource-intensive processing during batch windows

- Higher latency for data availability

- Complex error handling for failed batches

Many organizations still use this pattern for non-critical analytics where near real-time data is not required. The batch approach works well when transformation logic is complex or when target systems cannot handle continuous data streams.

CDC in Modern ELT and Streaming Environments

Modern ELT architectures load raw CDC events directly into data lakes or warehouses first. Stream processing engines then transform the data continuously as new events arrive.

Real-time data processing becomes possible with this approach. Platforms like Apache Kafka transport CDC events produced by capture tools and stream them to multiple downstream consumers simultaneously.

The transformation happens after loading raw data. This allows for more flexible data transformation since analysts can apply different business rules to the same source data.

Streaming architectures offer several advantages:

- Lower latency for fresh data availability

- Scalable processing that handles variable data volumes

- Event-driven transformations triggered by data arrival

- Multiple consumers can process the same CDC stream

Cloud platforms provide managed services that simplify streaming CDC implementations. These systems automatically handle scaling, fault tolerance, and data delivery guarantees that were complex to manage in traditional batch systems.

CDC Tools, Platforms, and Integrations

Modern analytics pipelines rely on specialized CDC tools and cloud platforms to capture data changes in real-time. These solutions integrate with streaming platforms like Apache Kafka and connect seamlessly to cloud data warehouses such as Snowflake and BigQuery.

Popular CDC Tools and Frameworks

Debezium leads the open-source CDC space as a distributed platform built on Apache Kafka. It monitors database transaction logs and captures changes from PostgreSQL, MySQL, MongoDB, and SQL Server.

Debezium connectors stream change events directly to Kafka topics. This enables real-time data processing with little impact on source database performance.

Airbyte offers an AI-powered data integration platform with over 600 pre-built connectors. It uses Debezium as an embedded library for log-based CDC operations.

The platform supports both homogeneous and heterogeneous database migrations. Users can replicate data between identical systems or different database engines.

Kafka Connect provides a framework for streaming data between Apache Kafka and external systems. It includes CDC connectors that capture database changes and publish them to Kafka topics.

Commercial solutions like Fivetran offer managed CDC services with automated schema changes and error handling. These platforms reduce operational overhead but come with higher costs.

Integration with Streaming Platforms

Apache Kafka serves as the backbone for most CDC implementations in analytics pipelines. It handles high-throughput change streams and provides durable message storage.

Kafka’s distributed architecture ensures fault tolerance and scalability. Multiple consumers can process the same change events for different analytics use cases.

Kafka Connect simplifies integration by providing pre-built connectors for popular databases and data warehouses. It handles tasks like serialization, partitioning, and offset management automatically.

Stream processing frameworks like Apache Spark integrate with Kafka to transform CDC events before loading them into analytics systems. This enables real-time data enrichment and validation.

Many organizations combine Kafka with schema registries to manage data format evolution. This ensures compatibility as source systems change their data structures over time.

CDC in Cloud Analytics Ecosystems

Snowflake supports CDC through its Streams feature, which tracks row-level changes to Snowflake tables, and through third-party connectors that capture changes from operational databases and load them into Snowflake automatically.

The platform integrates with tools like Airbyte and Fivetran for managed CDC pipelines. These connections handle schema evolution and data type mapping between source and target systems.

BigQuery supports CDC ingestion through the Storage Write API and through external connector integrations. Google Cloud services such as Datastream and Dataflow capture and process CDC events and load them into BigQuery tables.

Cloud platforms provide native CDC services that reduce infrastructure management overhead. AWS Database Migration Service and Azure Data Factory include built-in CDC functionality for their respective ecosystems.

Multi-cloud CDC strategies often use vendor-neutral tools like Debezium or Airbyte. This approach prevents vendor lock-in while maintaining flexibility across different cloud providers.

Best Practices and Challenges in CDC Implementation

Successful CDC implementation requires careful attention to data consistency, performance optimization for high-volume scenarios, and robust error handling mechanisms. Organizations must address these core areas to build reliable analytics pipelines that maintain data accuracy across distributed systems.

Ensuring Data Consistency and Integrity

Data consistency remains the most critical challenge in CDC implementations. Organizations must implement multiple layers of validation to maintain data integrity across source and destination systems.

Consistency Mechanisms

- Transaction log ordering ensures changes apply in correct sequence

- Checkpointing tracks last processed change to prevent data loss

- Conflict resolution handles simultaneous updates to same records

Distributed systems create additional complexity for database replication. Network delays can cause changes to arrive out of order at destination systems. CDC tools must implement ordering guarantees to maintain referential integrity.
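Checkpointing and ordering can be illustrated with a consumer that remembers the last position it applied and ignores anything at or before it. The `CheckpointedConsumer` class and the `(position, key, value)` event tuples are hypothetical; a real system would persist the checkpoint durably, ideally in the same transaction as the state change.

```python
class CheckpointedConsumer:
    """Apply ordered change events exactly once per position (illustrative sketch)."""

    def __init__(self):
        self.checkpoint = -1   # last applied log position
        self.state = {}

    def process(self, events):
        """Apply events in log order and return how many were newly applied."""
        applied = 0
        for position, key, value in events:
            if position <= self.checkpoint:
                continue       # already applied before a restart or retry
            self.state[key] = value
            self.checkpoint = position
            applied += 1
        return applied


log = [(0, "a", 1), (1, "b", 2), (2, "a", 3)]

consumer = CheckpointedConsumer()
first = consumer.process(log[:2])   # process part of the log, then "crash"
second = consumer.process(log)      # replay from the beginning after restart

print(first, second, consumer.state, consumer.checkpoint)
```

The replay applies only the one event past the checkpoint, so redelivery after a failure neither loses nor duplicates changes.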

Data Validation Strategies

- Hash-based verification compares source and target data checksums

- Row count validation ensures complete data transfer

- Schema validation prevents incompatible data types from causing failures

Regular audits help identify consistency gaps before they impact analytics. Organizations should schedule validation jobs to compare source and destination datasets periodically.
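A validation job of this kind might look like the following sketch, which combines hash-based verification with a key-level comparison. The `row_digest` and `compare_tables` functions are illustrative; in practice the digests are usually computed inside each database and only the summaries are compared.

```python
import hashlib


def row_digest(row: dict) -> str:
    """Hash a row using a canonical column order so both sides agree."""
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source: list, target: list, key: str = "id") -> dict:
    """Report keys that are missing, unexpected, or different in the target."""
    src = {r[key]: row_digest(r) for r in source}
    tgt = {r[key]: row_digest(r) for r in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source_rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}, {"id": 3, "amount": 30}]
target_rows = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}, {"id": 4, "amount": 40}]

report = compare_tables(source_rows, target_rows)
print(report)
```

A row count check alone would pass here, since both sides hold three rows; the digests expose the drifted and misplaced records.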

Scaling CDC for High-Velocity and Large Volume Data

High-velocity environments demand specialized approaches to handle massive data volumes without impacting source system performance. Scaling challenges intensify as transaction rates increase beyond thousands of changes per second.

Performance Optimization Techniques

- Parallel processing splits change streams across multiple workers

- Batch processing groups small changes to reduce network overhead

- Resource allocation dedicates sufficient memory and CPU for CDC operations
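Parallel processing is usually safe only if all changes for a given key stay on the same worker, so that per-key ordering is preserved. A minimal sketch, assuming string keys and hypothetical `partition_for` and `split_stream` helpers:

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a hash that is stable across processes."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def split_stream(events, num_partitions):
    """Route each (key, payload) event to its partition, preserving arrival order."""
    partitions = [[] for _ in range(num_partitions)]
    for key, payload in events:
        partitions[partition_for(key, num_partitions)].append((key, payload))
    return partitions


events = [
    ("cust-1", "v1"),
    ("cust-2", "v1"),
    ("cust-1", "v2"),
    ("cust-3", "v1"),
    ("cust-1", "v3"),
]

partitions = split_stream(events, num_partitions=3)
for index, part in enumerate(partitions):
    print(index, part)
```

The example uses `zlib.crc32` rather than Python's built-in `hash()`, because string hashes from `hash()` are randomized per process and would route the same key differently after a restart.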

Log-based CDC methods prove most effective for large data volumes. These approaches read transaction logs without adding triggers or queries that slow source databases.

Infrastructure Considerations

- Message queues buffer changes during peak load periods

- Horizontal scaling distributes CDC workload across multiple nodes

- Connection pooling limits database connections to prevent resource exhaustion

Data management teams must monitor system metrics continuously. Memory usage, CPU utilization, and network throughput indicate when scaling becomes necessary.

Data Validation and Error Handling

Robust error handling prevents data pipeline failures from corrupting analytics datasets. Organizations must design CDC systems that gracefully handle various failure scenarios while maintaining data accuracy.

Common Error Scenarios

- Network timeouts during data transmission

- Schema changes that break downstream processing

- Duplicate records from retry mechanisms

- Missing data from source system failures

Error Recovery Mechanisms

Dead letter queues capture failed messages for manual review. Retry logic attempts reprocessing with exponential backoff to avoid overwhelming systems. Circuit breakers stop processing when error rates exceed thresholds.
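Retry with exponential backoff and a dead letter queue can be sketched as below. The `process_with_retry` function, its parameters, and the in-memory `dead_letters` list are assumptions for illustration; the circuit breaker is left out to keep the example short.

```python
import time


def process_with_retry(message, handler, dead_letters,
                       max_attempts=4, base_delay_s=0.5, sleep=time.sleep):
    """Run handler(message); retry with exponential backoff, then dead-letter."""
    for attempt in range(max_attempts):
        try:
            handler(message)
            return True
        except Exception as exc:
            if attempt == max_attempts - 1:
                # Out of attempts: park the message for manual review.
                dead_letters.append((message, repr(exc)))
                return False
            sleep(base_delay_s * 2 ** attempt)   # 0.5s, 1s, 2s, ...
    return False


delays = []                 # records requested sleeps instead of actually waiting
calls = {"n": 0}


def flaky(message):
    """Fails twice with a transient error, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient")


def poison(message):
    """Always fails, like a message with an unparseable payload."""
    raise ValueError("bad payload")


dlq = []
ok = process_with_retry({"id": 1}, flaky, dlq, sleep=delays.append)
bad = process_with_retry({"id": 2}, poison, dlq, sleep=delays.append)

print(ok, bad, delays, dlq)
```

The transient failure recovers on the third attempt, while the poison message ends up in the dead letter list after four attempts instead of blocking the stream.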

Monitoring and Alerting

Real-time monitoring tracks CDC pipeline health through key metrics. Data lag measurements identify processing delays before they impact analytics. Error rate tracking helps teams respond quickly to system issues.

Teams should implement comprehensive logging to troubleshoot problems effectively. Detailed logs help identify root causes when data validation checks fail or replication stops working properly.

Advanced Patterns and Future Trends

Change Data Capture is evolving beyond basic replication to enable real-time anomaly detection, serverless architectures, and complex multi-source analytics. These advanced patterns eliminate traditional ETL bottlenecks while supporting diverse data formats and integration scenarios.

Real-Time Anomaly Detection Using CDC

CDC enables instant anomaly detection by streaming database changes to machine learning models. This pattern eliminates batch processing delays that can miss critical incidents.

Financial institutions use this approach to detect fraud within seconds of suspicious transactions. The CDC stream feeds real-time scoring engines that analyze patterns across multiple data sources.

Key implementation steps:

- Configure CDC to capture transaction tables

- Stream changes to anomaly detection models

- Set up automated alerts for threshold breaches

Manufacturing companies apply similar patterns to monitor equipment sensors. When sensor readings change in the database, CDC immediately triggers anomaly detection algorithms.

This pattern works best with high-frequency data changes. It requires low-latency messaging systems to maintain real-time performance across the analytics pipeline.
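As a stand-in for a real model, the sketch below flags values in a change stream that deviate sharply from a rolling window. The `RollingZScore` class, its thresholds, and the sample amounts are illustrative assumptions; production fraud or sensor models are considerably more involved.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScore:
    """Flag values far from the mean of a recent window (illustrative sketch)."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self._values = deque(maxlen=window)
        self._threshold = threshold
        self._min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the window so far."""
        is_anomaly = False
        if len(self._values) >= self._min_samples:
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0 and abs(value - mu) / sigma > self._threshold:
                is_anomaly = True
        self._values.append(value)
        return is_anomaly


# Transaction amounts as they might arrive from a CDC stream.
amounts = [50, 52, 49, 51, 50, 48, 5000, 51]
detector = RollingZScore()
flags = [detector.observe(a) for a in amounts]

print(flags)
```

Only the 5000 entry is flagged. Note that this simple version adds the outlier to its own window, which temporarily widens the accepted range; excluding flagged values from the window is one possible refinement.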

Emerging Zero-ETL and Serverless CDC Solutions

Zero-ETL architectures eliminate traditional extract, transform, and load processes by using CDC for direct data integration. Cloud providers now offer serverless CDC services that scale automatically.

AWS DMS and Azure Data Factory provide managed CDC without infrastructure management. These services handle different data formats including JSON, Avro, and Parquet automatically.

Benefits of serverless CDC:

- Cost efficiency – Pay only for actual data changes processed

- Auto-scaling – Handle traffic spikes without manual intervention

- Reduced complexity – No server management or capacity planning

Serverless patterns work well for variable workloads. Organizations can process millions of changes during peak hours without provisioning permanent infrastructure.

The zero-ETL approach connects operational databases directly to analytics systems. This reduces data movement delays and can lower storage costs by removing intermediate staging copies.

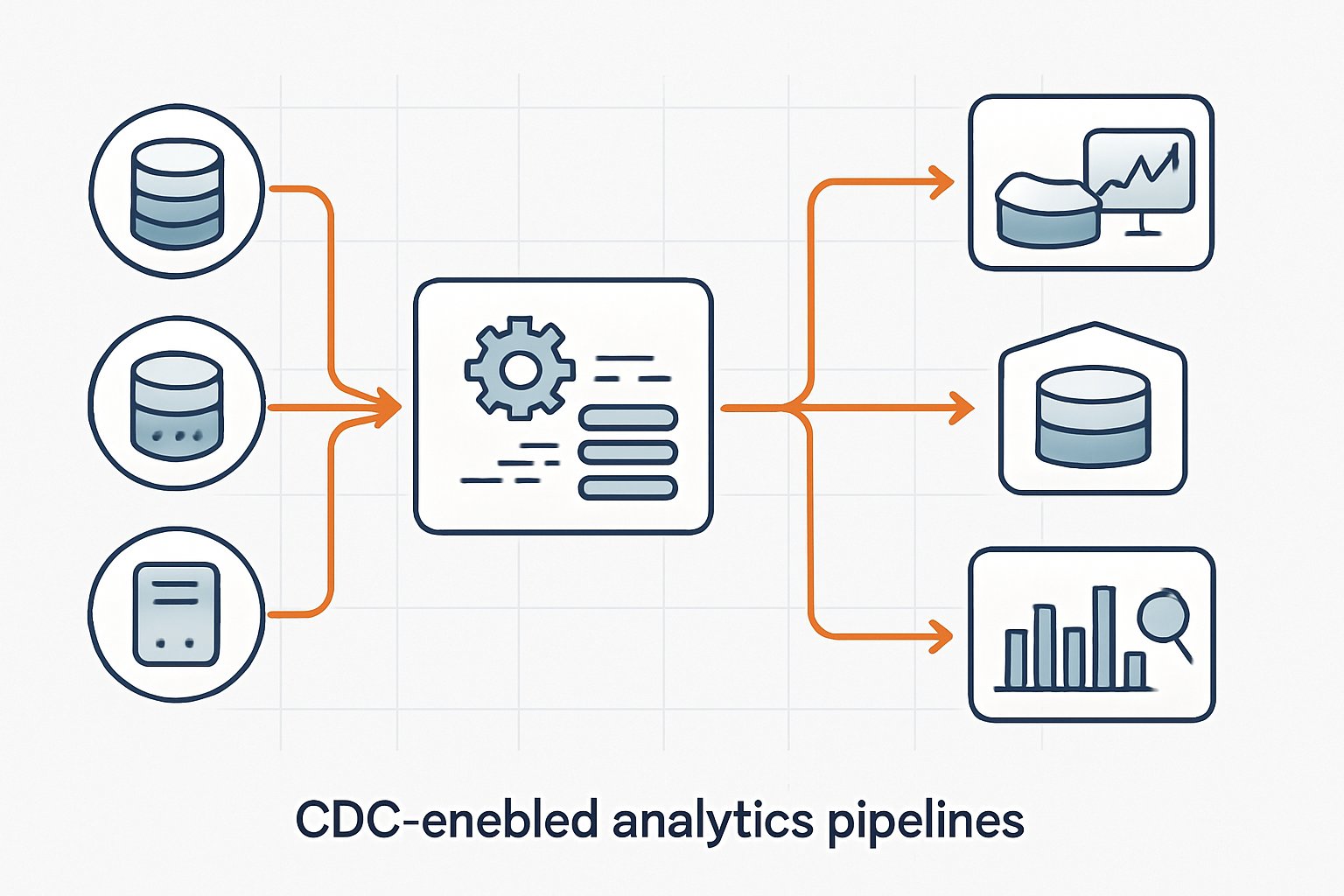

CDC for Multi-Source and Multi-Sink Analytics

Modern analytics requires data from multiple databases, applications, and external sources. CDC patterns now support complex routing between many sources and destinations.

Organizations combine CDC streams from PostgreSQL, MySQL, and MongoDB databases. The unified stream feeds data warehouses, real-time dashboards, and machine learning platforms simultaneously.

Common multi-source patterns:

- Database federation across different vendors

- Application data consolidation for 360-degree views

- Real-time data lake population from operational systems

Data integration challenges include handling different data formats and schema evolution. CDC solutions use schema registries to manage changes across multiple data sources.

Multi-sink patterns distribute the same CDC stream to various analytics tools. One change event might update a data warehouse, trigger a real-time alert, and train a machine learning model.

This approach reduces database load by capturing changes once and distributing them everywhere needed.
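The capture-once, consume-many idea can be modeled with an append-only log that tracks a separate read offset per consumer, similar in spirit to how streaming platforms let independent consumers read the same topic. The `ChangeLog` class and the consumer names are hypothetical.

```python
class ChangeLog:
    """Append-only event log with an independent offset per consumer (sketch)."""

    def __init__(self):
        self._events = []
        self._offsets = {}

    def append(self, event):
        self._events.append(event)

    def poll(self, consumer: str, max_events: int = 100):
        """Return the next events for this consumer and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch


log = ChangeLog()
for event in ({"id": 1, "op": "c"}, {"id": 1, "op": "u"}, {"id": 2, "op": "c"}):
    log.append(event)              # each change is captured once

warehouse_batch = log.poll("warehouse")           # reads everything in one batch
alerts_first = log.poll("alerts", max_events=1)   # reads at its own pace
alerts_rest = log.poll("alerts")

print(len(warehouse_batch), alerts_first, len(alerts_rest))
```

Neither consumer touches the source database, and a slow consumer does not hold back a fast one, because each only advances its own offset.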

Frequently Asked Questions

Common questions about change data capture implementation focus on specific platform configurations, performance optimization, and choosing the right mechanisms for different database systems. These practical concerns address real-world challenges developers face when building analytics pipelines.

What are the best practices for implementing change data capture in analytics pipelines?

Log-based CDC provides the best performance for analytics pipelines. It reduces load on source databases and captures all change types without schema modifications.

Teams should implement proper error handling and retry mechanisms. CDC streams can experience connectivity issues or lag behind database changes.

Use dedicated messaging systems like Kafka to buffer changes between source and target systems. This prevents data loss when downstream systems are temporarily unavailable.

Configure appropriate batch sizes for CDC processing. Small batches provide lower latency while larger batches improve throughput for analytics workloads.

Monitor replication lag closely. In PostgreSQL, for example, a replication slot with a slow or disconnected consumer keeps retaining WAL files, which can fill the disk and take the database down.

How can one utilize Azure Data Factory for effective change data capture with SQL Server?

Azure Data Factory supports native CDC integration with SQL Server through built-in connectors. Users can configure these connectors to read from SQL Server change tables automatically.

The platform handles checkpoint management automatically. It tracks the last processed change and resumes from that point during subsequent runs.

Data Factory allows scheduling CDC pipelines at regular intervals. Teams can balance between real-time needs and system performance by adjusting frequency.

The service provides monitoring and alerting capabilities for CDC pipelines. This helps teams identify issues before they impact downstream analytics systems.

Integration with Azure Synapse enables direct loading of CDC data into analytics warehouses. This creates end-to-end pipelines without additional data movement steps.

What are the differences between change data capture mechanisms in Azure and Databricks?

Azure Data Factory focuses on managed CDC connectors with minimal setup requirements. It abstracts away infrastructure complexity but offers less customization.

Databricks provides more control over CDC implementation through Delta Live Tables and structured streaming. Users can customize processing logic and handle complex transformations.

Azure Data Factory handles scaling automatically based on data volume. Databricks generally leaves more of the cluster configuration and tuning for CDC workloads to the team, although autoscaling options are available.

Databricks excels at real-time processing with sub-second latency capabilities. Azure Data Factory typically operates on scheduled intervals with higher latency.

Cost models differ significantly between platforms. Azure Data Factory bills for pipeline orchestration and execution, such as activity runs and data movement, while Databricks charges for the compute resources consumed.

How do you configure change data capture for Oracle databases in Azure Data Factory?

Oracle CDC in Azure Data Factory requires enabling LogMiner or Oracle GoldenGate on the source database. The database administrator must configure these features before creating pipelines.

Users create linked services pointing to Oracle databases with appropriate credentials. The service account needs specific permissions to read transaction logs.

CDC datasets in Data Factory specify which tables to monitor for changes. Users can filter specific operations like inserts, updates, or deletes.

Pipeline activities read from CDC datasets and write to target systems. Common targets include Azure SQL Database, Synapse, or blob storage for analytics.

Checkpoint management ensures reliable processing across pipeline runs. Azure Data Factory automatically tracks progress and handles restarts gracefully.

What performance considerations should be taken into account when using change data capture in large-scale environments?

Network bandwidth becomes critical for high-volume CDC implementations. Teams should provision adequate connectivity between source databases and processing systems.

Source database performance can degrade if CDC consumers lag behind. Monitor replication slot sizes and consumer processing rates regularly.

Parallel processing improves throughput for large change volumes. Configure multiple consumers or partition data by table or key ranges.

Storage costs increase with CDC data retention. Implement lifecycle policies to archive or delete old change records based on business requirements.

Target system capacity must handle peak change volumes. Analytics databases need sufficient resources to process large batches of CDC data efficiently.

Can you explain the role of Microsoft’s Service Fabric in change data capture for analytics pipelines?

Microsoft Service Fabric is a distributed systems platform for deploying and managing microservices and containers. For CDC, it can manage the scaling and reliability of custom CDC processing services.

Service Fabric enables microservices architectures for CDC pipelines. Teams can deploy separate services for different databases or processing functions.

The platform handles service discovery and load balancing automatically. CDC services can communicate efficiently within the Service Fabric cluster.

Health monitoring and automatic failover improve CDC pipeline reliability. Service Fabric restarts failed services and redistributes workloads as needed.

Integration with Azure services allows hybrid CDC architectures. On-premises Service Fabric clusters can connect to cloud-based analytics platforms seamlessly.